Three-dimensional models are now widely used in the gaming and movie industries, and one of the most common methods for creating them uses computational photography. This, the first of two articles, explains how it works.

As humans, we are all familiar with the concept of 3D through our own binocular vision. Our eyes, our "sensors," view two slightly offset perspectives of the world in front of us, and through some clever neural processing our brain fuses the two images to create a perception of depth. It's a sophisticated adaptation of vision and incredibly useful!

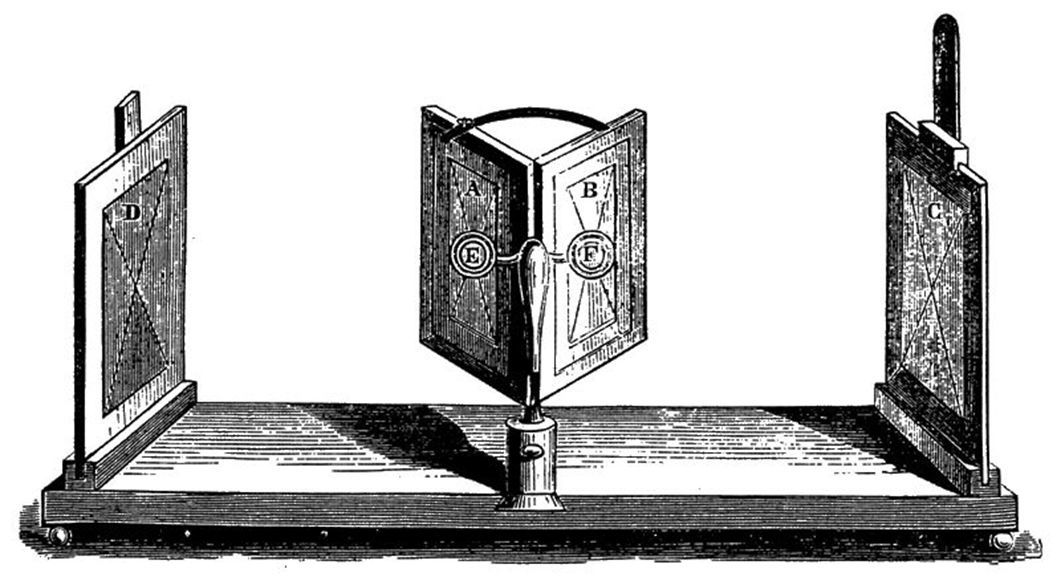

Most of us will have become familiar with 3D images at the movies, through polarised glasses, but if we stretch back a little further, the more "experienced" photographers may remember wearing glasses with red and blue lenses to view a dinosaur or shark in a kid's magazine. However, the understanding of binocular vision and its use to view images in 3D (stereoscopy) goes back to Sir Charles Wheatstone's invention of the stereoscope in 1833.

Wheatstone's Stereoscope

Photography was the obvious companion for stereoscopy, which was immensely popular with a Victorian society eager to consume new technologies. Brian May's (yes, that Brian May!) sumptuously illustrated photobook "A Village Lost and Found" is a prime example, showcasing T.R. Williams' wonderful stereophotos of an unnamed village. May identifies the village as Hinton Waldrist in Oxfordshire, rephotographs the same scenes and includes a stereoscope of his own design. Viewing examples such as this demonstrates that there is something magical about stereo vision: even now, with all our technology, viewing a static scene and being able to perceive depth is exciting. It's a window on "a world that was", and we view it as if we were actually there. However, that static scene is also the principal limitation of stereo photos (and movies): they are curated for us, and we have no way of interacting with the 3D world we view. This is why virtual reality is thought to be the next game changer, not just for interactive games but for interactive movies as well.

To move from a static to a fully interactive view you need more than depth perception. You actually need a 3D replica of the real world that allows you to change viewpoint and move around. So how do you do this? One photographic technique, photogrammetry, calculates the x, y and z coordinates of points in photos, allowing you to view them stereoscopically. The traditional approach requires two overlapping photos with at least six points in the overlap whose coordinates you already know. Using these known coordinates, it is possible to back-calculate the exact 3D position and orientation of each camera when the photos were taken. With these known, you can form a baseline between the two camera positions and then a triangle with your point of interest; once you have a triangle, some simple trigonometry gives you the position of the feature.

High school trigonometry to calculate 3D models
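To make the "simple trigonometry" step concrete, here is a minimal Python sketch under simplifying assumptions: it works in 2D rather than 3D, places camera A at the origin and camera B at the far end of a baseline along the x-axis, and assumes the angle between the baseline and the line of sight to the point has already been measured at each camera. The function name `triangulate` is hypothetical; real photogrammetry software solves the full 3D problem using the recovered camera orientations.

```python
import math

def triangulate(baseline, alpha_deg, beta_deg):
    """Locate point P seen from camera A at (0, 0) and camera B at (baseline, 0).

    alpha_deg: angle at A between the baseline and the line of sight to P.
    beta_deg:  angle at B between the baseline and the line of sight to P.
    Returns the (x, y) position of P in the same units as the baseline.
    (Hypothetical helper: a 2D simplification of the photogrammetric triangle.)
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)
    # The angles of the triangle sum to 180 degrees, giving the angle at P.
    gamma = math.pi - alpha - beta
    if gamma <= 0:
        raise ValueError("the lines of sight do not meet in front of the cameras")
    # Law of sines: |AP| / sin(beta) = baseline / sin(gamma).
    dist_a = baseline * math.sin(beta) / math.sin(gamma)
    # Walk dist_a from camera A along its line of sight.
    return dist_a * math.cos(alpha), dist_a * math.sin(alpha)

x, y = triangulate(10.0, 45.0, 45.0)
print(round(x, 6), round(y, 6))  # prints 5.0 5.0
```

With a baseline of 10 units and both lines of sight at 45 degrees, the point lands halfway along the baseline and 5 units in front of it, as the symmetry of the triangle suggests.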

This process is accurate but costly and time-consuming, which is why a relatively new approach called "structure from motion" is so exciting. Instead of two overlapping photos, we now take a large number of photos of our subject of interest, say 50. These are then used in a two-stage process to calculate the positions of points visible across the photos.

The first stage, called the sparse matcher, tries to calculate the positions of all the cameras when the photos were taken. There are no known coordinates, so the software relies on finding exactly the same visible features across as many photos as possible; this allows it to estimate the most likely camera positions and then refine them. These features are high-contrast points and can run to thousands in each photo, each of which is compared with thousands in every other photo, so it's a massive computational task.

With the camera positions calculated, stage two, the dense matcher, begins. For all overlapping photos (now with known positions), a second and much more detailed search for high-contrast points is undertaken. Once these are located, the position of each feature is calculated. The result is an interactive 3D world containing millions of points (a point cloud), each with its color taken from the photos. At a distance it looks like a photo, but it is now interactive, and if you zoom in you will eventually see all the individual points. It is quite amazing to take a set of photos of an object and, 10 minutes later, have a fully interactive 3D world generated from them.
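The article doesn't say how the sparse matcher decides that two features are "exactly the same", so the sketch below rests on an assumption: each feature is summarised by a numeric descriptor vector (as detectors such as SIFT produce), and a feature in one photo is matched to its nearest neighbour in another photo only if that neighbour is clearly closer than the runner-up, a filter known as Lowe's ratio test. The helper names `distance` and `match_features` are hypothetical, and the tiny 2D descriptors are illustrative values only; real pipelines use high-dimensional descriptors, fast approximate search and further geometric checks.

```python
import math

def distance(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def match_features(desc_a, desc_b, ratio=0.8):
    """Match descriptors from photo A to photo B with a nearest-neighbour ratio test.

    Returns a list of (index_in_a, index_in_b) pairs. (Hypothetical helper.)
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs a best and a second-best candidate
    for i, d in enumerate(desc_a):
        # Rank every feature in photo B by how close its descriptor is to d.
        ranked = sorted(range(len(desc_b)), key=lambda j: distance(d, desc_b[j]))
        best, second = ranked[0], ranked[1]
        # Keep the match only if the best candidate is clearly better than the
        # runner-up; ambiguous features (e.g. repeated texture) are discarded.
        if distance(d, desc_b[best]) < ratio * distance(d, desc_b[second]):
            matches.append((i, best))
    return matches

# Illustrative descriptors only: three features in photo A, three in photo B.
photo_a = [(0.0, 0.0), (5.0, 5.0), (7.5, 2.5)]
photo_b = [(0.1, 0.0), (5.0, 5.1), (10.0, 0.0)]
print(match_features(photo_a, photo_b))  # prints [(0, 0), (1, 1)]
```

The third feature in photo A is almost equally close to two features in photo B, so the sketch rejects it as ambiguous rather than risk a wrong match feeding into the camera-position estimate.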

Rushton Triangular Lodge in 3D

The generation of 3D features and landscapes using structure from motion has been nothing short of revolutionary in fields ranging from crime scenes and real estate to landslide control, archaeology and movie making, all of which need to reconstruct the 3D structure of the real world. The flexibility of the approach also means that you can combine source photos from many people using different cameras. This in turn means that, with sufficient photos, it is possible to reconstruct 3D scenes from historic images.

Two recent examples stand out. Mad Max: Fury Road (Oscar-nominated for Visual Effects) used photogrammetry to create the visual effects for the Citadel, photographing the Blue Mountains in Australia from a helicopter with a standard stills camera, then texturing the model and using it as a backdrop. The team also reconstructed a number of the vehicles before modeling them in visual effects software. The advance of ISIS into Palmyra was devastating: many ancient monuments that had survived for millennia were destroyed and are now permanently lost. With so much archaeology at risk, Oxford's Institute of Digital Archaeology began distributing thousands of cameras to volunteers in Palmyra for its "Million Image Database", with the intention of using photogrammetry to reconstruct the buildings. The first fruit of this work, a 3D-printed Temple of Bel, was subsequently unveiled in Trafalgar Square, London, UK.

Structure from motion offers an incredibly accessible way to recreate 3D features and landscapes using nothing but an ordinary digital camera (and a fast computer!). Its accessibility and low barrier to entry make it particularly exciting for photographers. In the next article I'll cover the workflow for creating your own 3D models and presenting them on the web.

I do this quite frequently to create relatively accurate models of praying mantises. The process, as noted above, is astoundingly time-consuming. The final product for me, though, is a 3D print rather than an interactive model.

http://www.reagandpufall.com/remnants/

Wow, what an amazing model. The benefit of a photogrammetric approach is its considerable flexibility: if you use a synced multi-camera setup, you capture an instant in time. Fantastic application.

Nice article, but it should mention Sketchfab, the biggest 3D content repository, which a lot of people use to share 3D models like these (and embed them in articles as you would a YouTube video).

https://sketchfab.com/

Good shout. I haven't covered moving beyond point clouds to 3D models. Thanks for highlighting it.