Lightroom is a notorious slow-poke, and higher-resolution cameras are even dragging down Photoshop. While there are plenty of software tweaks to eke out a few more percent here and there, want to know how you can actually kick your editing workflow into high gear?

A few months ago, I was dealing with all the usual software slowdowns in my photo editing workflow. It was partly because I’d just gotten an even higher-resolution camera and partly because I had some new client needs that meant shooting HDR brackets and more panoramas. Altogether, this meant heavier files and more of them. Everything from importing and rendering previews, to editing, to exporting the finished files was chugging along at a miserable pace. I spent a bit of time refining all the software settings I could, cleaning up drivers and nudging my overclock just a little higher, but it just wasn’t enough. It was finally that time: time for a hardware upgrade.

When you’re looking at upgrading your computer for a photography workflow, there are a few key things to consider. If you have a prebuilt computer from a manufacturer like Dell or a laptop, some of these upgrades may not be possible. You can probably replace the RAM with a higher capacity set of sticks if it isn’t soldered in or add a GPU via an external enclosure. For the best upgradability, consider building your own computer. It’s incredibly easy, provides the best value possible for your parts, and can be done in an hour or two (it’s also a great project to undertake if you’re still in a locked-down area). Also, some of these upgrades may trigger the need for other upgrades, like going to a new motherboard and DDR4 memory for some processors, or a higher-wattage PSU for a newer graphics card.

The other consideration is recognizing how you want to apportion your budget. Depending on where your workflow needs a speed boost, you can focus on just maxing out a part or spreading the benefits around to all aspects of your computer. In the individual part sections, I’ll try to indicate which aspects of the workflow they most impact.

Processor

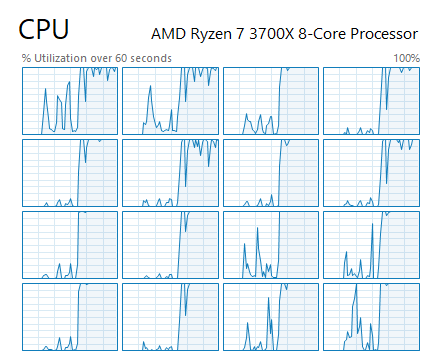

Your computer’s processor impacts every aspect of your workflow. From generating previews on import to rendering many of the filter and slider effects to saving and exporting speeds, the processor plays a key role. For a number of years, there were only marginal gains from each new generation of processors, but I think that for many users, the time to upgrade has come. If you’re on a 4th-, 5th-, or 6th-generation Intel chip, or a pre-Zen AMD chip, even a mid-level processor and entry-level motherboard will offer a massive speed boost, as well as a host of convenient features. New boards have plenty of USB 3 ports, USB-C support, NVMe storage, and more, expanding the benefits beyond just a raw speed advantage.

Some tasks, like exporting from Lightroom, can take advantage of 8 or more cores for a huge improvement in speed.

Some of my favorite picks for processors include the 3700X, 3900X, and i9-10900K. Each offers a substantial core count increase over old four-core CPUs and a big boost to IPC (instructions per clock). What this means in plain English is the ability to do more work at once and do it faster. While many photo workflows don’t parallelize well across many cores, software support has been getting better in this area. With that in mind, don’t feel like you need to over-buy if you’re just doing photo work. The more expensive options offer only slight improvements for a purely photo-based workflow. If you find that you’re doing video work, however, they can offer much more benefit.
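One common way to reason about why extra cores help an export far more than an interactive edit is Amdahl's law. The sketch below is purely illustrative: the parallel fractions are made-up assumptions, not measurements of Lightroom or Photoshop.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only part of the work runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workloads: the fractions are assumptions for illustration only.
workloads = {
    "export-like task (assumed 90% parallel)": 0.90,
    "interactive edit (assumed 30% parallel)": 0.30,
}

for label, fraction in workloads.items():
    for cores in (4, 8, 12):
        print(f"{label}, {cores} cores: {amdahl_speedup(fraction, cores):.2f}x")
```

Under these assumed fractions, going from 4 to 12 cores helps the export-like task noticeably while barely moving the interactive one, which is the pattern the paragraph above describes.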

For 90% of users, the 3700X is the sweet spot of performance and value, outperforming chips that cost multiples of its price on Puget’s benchmarks for Lightroom and PS. Of course, the 3900X is all that goodness and more, but at a higher price. If you’re upgrading, don’t forget to budget the cost of an AM4 motherboard and potentially DDR4 RAM if you’re coming from an older system — one reason to consider the 3700X more closely.

For the top of the Photoshop workflow, Intel’s i9-10900K is king, but as it loses to the 3900X in both price and Lightroom performance according to Puget’s benchmarks, you have to consider what your workflow looks like.

Memory

A memory upgrade is one of the easiest options, making it a perfect way to get comfortable working on your computer. If you have less than 8 GB of memory, place your order today. RAM prices have fallen to the point where I’d consider 16 GB the minimum for a desktop. While you can go to 32 GB or 64 GB easily, this is definitely one area that’s affected by workflow. Big data sets, like stacking, panorama stitching, and heavily layered documents, will all benefit from more RAM, but if you’re keeping things simple, 16 GB should be fine.

The RGB lighting isn't necessary, but having enough capacity certainly is.

If you’re upgrading your processor, you’ll need to consider the generation and speed. DDR4 is the newer standard, meaning your older DDR3 sticks can’t be carried forward. Speed is also important, especially for AMD processors. Owing to their architecture, you’ll want to get at least 3,200 MHz speeds, with 3,600 MHz preferred (it’s more expensive, of course).

For RAM, a broad recommendation is difficult. Consider your budget, size, and speed needs, then take a look at what’s in stock. The RAM chips themselves all come from a few fabs, so the brand on the box is more about aesthetics and warranty than real performance differences (assuming identical speeds without overclocking).

Storage Drives

Remember the mention of NVMe a while back? That’s an interface for SSDs that will truly change how you work with Lightroom. Consider the differences between 4 storage setups: a single hard drive, a pair of hard drives in RAID 0, a SATA SSD, and an NVMe SSD. A single hard drive will be around 200 MB/s, with the RAID 0 pair sitting a bit faster. A SATA SSD can effectively max out the SATA port it’s connected to at 550 MB/s. All of that pales in comparison to a basic NVMe SSD, which can do over 3,000 MB/s.
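To make those throughput figures concrete, here is a rough back-of-the-envelope sketch of ideal transfer times for one batch of files. The RAID 0 figure and the batch size are assumptions chosen for illustration, and real-world transfers will be slower than these ideal numbers.

```python
# Approximate sequential throughput in MB/s. The hard drive, SATA SSD, and
# NVMe figures come from the text; the RAID 0 figure is an assumption.
THROUGHPUT_MB_S = {
    "single hard drive": 200,
    "RAID 0 pair (assumed)": 400,
    "SATA SSD": 550,
    "NVMe SSD": 3000,
}


def transfer_seconds(total_mb: float, mb_per_s: float) -> float:
    """Ideal time to move total_mb at a sustained rate, ignoring overhead."""
    return total_mb / mb_per_s


# Hypothetical shoot: 500 raw files at 50 MB each.
batch_mb = 500 * 50

for name, rate in THROUGHPUT_MB_S.items():
    print(f"{name:>22}: {transfer_seconds(batch_mb, rate):6.1f} s")
```

For this hypothetical 25,000 MB batch, the single hard drive needs about two minutes while the NVMe drive needs under ten seconds.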

Now, raw speed isn’t everything, but in every aspect other than $/GB, an SSD will offer more performance and reliability. The best part of using one of these SSDs as your boot drive is a performance boost across everything you do on your computer. While SSD storage has gotten cheaper, it’s still cost-prohibitive to store huge media libraries on it. Instead, consider setting up some storage tiers. With a 1 TB SSD, you can have your OS, programs, and a year or two of recent images. From there, you can back up those contents to a pair of large hard drives for cheap, failure-tolerant bulk storage.

My picks for SSDs are the Inland Premium and WD Black SN750. For hard drives, I’ve had good luck with Western Digital’s 8 TB models. Red drives of 8 TB and higher are free from the slower SMR technology and represent a good intersection between performance and price.

Header image courtesy of SpaceX.

Personally, I am rocking an i7 9700K, 32 GB of 3,600 MHz RAM, a GeForce RTX 2070, and an NVMe SSD that benchmarks at about 2.5 GB/s, and yet LR and PS still run horribly for me. Adobe really needs to shape up their software; it is slower for me to work on image files now than half a decade ago (and my machine specs are obviously much more powerful now).

It is easier for me to edit 4k footage in DaVinci than it is to edit a 24mp raw file in PS.

Ryan Cooper, I completely agree! I have issues with Adobe software all the time and it drives me insane. I have a pretty decent iMac and MacBook Pro, but often find myself frustrated with Photoshop either crashing or giving me a spinning wheel. Every new Adobe release is different, of course. I quit using Premiere altogether in favor of Final Cut Pro because, a few versions back, I couldn't even play back low-res proxy video within Premiere in order to cut a video together. I've had much better and more reliable performance from Final Cut. I can't really leave LR or PS though, and honestly don't want to. I just want better performance and reliability.

That being said, I have found a pretty fast way to work in Photoshop and despite the shortcomings of Photoshop's bugs (with seemingly every release), I've discovered a much faster workflow for the majority of my work. Check this out: https://fstoppers.com/originals/photoshops-hidden-gem-revealed-how-save-...

You might have to buy an ARM computer from Apple in the future, because I guess that by changing the architecture, Adobe will be forced to rewrite the code for those programs sooner or later.

They might even take the code from the iPad and port it to the ARM macs.

At least this is my hope.

I think Apple is providing a way (Rosetta 2) to port to ARM. If that's the case, Adobe may not have to rewrite much, if at all. But, it would definitely be a good thing if that fixes Adobe's laziness.

That is true but Rosetta 2 is just the "bridge technology". Sooner or later they have to adapt it natively and in the long run they cannot use the old code. At least that is my hope.

And, although I don't know for sure, I would think it might be easier to take the iPad code and adapt it than to adapt the version written for Intel. iPad apps run natively on Big Sur. So this might be an avenue. But we will see.

They'll just discontinue LR Classic and only keep developing the mobile/tablet version.

Well. I would not care which version it is that they use for ARM as long as all the functions are the same. Right now LR CC is not on par with LR Classic. But if that is the case I don't care what it is called as long as it is running fast.

Apple's track record of making awesome portable devices using their own chips can only mean great things for the Mac side of things. I think ARM Macs will run more smoothly, have much more processing power, and give professional users a better overall experience when it comes to handling large files.

My hope is that Adobe will start rolling out less buggy software as well as start taking advantage of this new hardware by the time the new ARM Macs are released. In my personal experience, I believe a lot of performance issues from Adobe products have been due to the fact that they aren't always necessarily optimized for the hardware that they are running on. But I'm also not a developer so....

LR does a bad job of utilizing the hardware you give it, but the results are still better than if you were running worse hardware. Definitely agree that there's a lot to be done on the software side of things.

Same hardware except I have "just" a 1060 GPU, and LR is horrendously slow. I went the Resolve way a couple of years ago, goodbye Premiere. Now the only next step is to go to Capture One, which I'll do next time there's a sale.

With the spec you listed, PS at least should be fast. If that is not the case, I would recommend you look at:

1. PS scratch disks size and location

2. Is PS actually using your GPU card?

3. Have you tuned your BIOS? If so, reset it to defaults and run again.

4. Copy a BIG file, e.g. 32 GB in size (bigger than your RAM, so no caching can help the system), and see if the I/O stays constant or if it tanks after a few GB have been copied. That points to the SSD being thrashed; some SSDs then drop down to ~200 MB/s throughput.

5. Check how much RAM is actually free in Task Manager.
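The sustained-throughput check in step 4 can also be scripted instead of eyeballing a file copy. The following is a minimal sketch, not a proper benchmark: the function name and file name are hypothetical, and the demo only writes 8 MB to a temporary directory so that it is safe to run as-is.

```python
import os
import tempfile
import time


def measure_write_throughput(path, total_bytes, chunk_bytes=64 * 1024 * 1024):
    """Write total_bytes to path in chunks and return MB/s for each chunk."""
    rates = []
    written = 0
    with open(path, "wb") as f:
        while written < total_bytes:
            size = min(chunk_bytes, total_bytes - written)
            data = os.urandom(size)  # generated outside the timed region
            start = time.perf_counter()
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # push the chunk to the device, not just the OS cache
            elapsed = max(time.perf_counter() - start, 1e-9)
            rates.append(size / elapsed / 1e6)
            written += size
    return rates


# Small demo (8 MB in a temporary directory) so the sketch is safe to run.
# For a real check, point the path at the drive under test and raise
# total_bytes above your RAM size, e.g. 32 * 1024**3.
with tempfile.TemporaryDirectory() as tmp:
    demo_rates = measure_write_throughput(
        os.path.join(tmp, "throughput_test.bin"),
        total_bytes=8 * 1024 * 1024,
        chunk_bytes=2 * 1024 * 1024,
    )

for i, rate in enumerate(demo_rates):
    print(f"chunk {i}: {rate:8.1f} MB/s")
```

If the per-chunk rate starts high and then drops sharply partway through a large run, that would be consistent with the drive's fast cache filling up, as described in step 4.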

I had an Adobe Tech spend 3 hours in a screen share trying to make it better, at the end of it all their statement was along the lines of: "You are editing 24mp raw files, this is as fast as you can expect". I cancelled my Adobe sub 20min later.

As for your specific suggestions.

1. I have done this making sure I am using an NVME SSD scratch disk.

2. Not really, unless I'm using a complex filter or something. While just generally moving around, cloning, etc., my GPU sits idle while usually one core is at 110%.

3. Can't unfortunately, I have an HP shire mobo and it doesn't allow for BIOS tuning.

4. I have benchmarked the SSD copying huge files and it maintains about 2.6 GB/s through the entire transfer.

5. The RAM usually sits at around 20 GB when editing images. It maxes out while editing 4K video in Resolve, but runs fine. As does everything else. It is only Adobe software that runs like crap. Capture One also runs butter smooth.

Regardless though, even if the solution is some custom BIOS tune, that is an insane requirement to use the software. This hardware is excessive overkill to edit a 24mp raw file. I was editing 36mp D800 files on an iMac 7 years ago with better performance than I can get now. Meanwhile this machine can run most games on ultra without skipping a beat and other media apps by other companies (Capture One, DaVinci, etc) extremely well. The problem is Adobe's.

That's the 2nd article on this in a couple of weeks. The best upgrade you can do with your money is probably not hardware, but to buy Capture One and never look back...

Unfortunately C1 isn't a substitute for PS IMO. For Lightroom, sure, particularly if you're not heavily invested in LR's catalogs.

True, and the LR catalogs are exactly my issue preventing me from upgrading (well besides the outrageous price).

Totally agree. Another BIG advantage of C1 is that you can store all its index files/catalogues on a Windows share! Works great on a NAS system.

Upgraded my i5 machine with 32GB DDR3 to a Ryzen 7 3700X with 64GB RAM, an NVIDIA 1050 4GB and 2 superfast NVMe drives. Paid 1000EUR for them and gained perhaps 10% (if even that) more speed in LR & PS. And my CPU runs way hotter, even with a bigger and noisier cooler. Money wasted.

At least 4k video editing and encoding benefited from the upgrade but I do that so rarely that it doesn't really matter.

Haha, the same happened to me! Worked fine for 2 days when the system was freshly installed, and after that it all went down the crapper again. I can't believe that removing 5 dust spots makes my 9700K + 32 GB RAM + GTX 1060 slow down to such a crawl that even Spotify starts stuttering.

Thanks for the article.

One thing not mentioned, which all our edit stations have, is a 10GigE network interface to a central Synology NAS system with lots of hard disks spinning in a RAID5 array serving data at 1GB/s... each PC has a single 512GB SSD for the OS and PS scratch disk. Built-in backups and snapshots all happen on the NAS server.

Edit PCs have more CPU free as network IO is offloaded to the network cards.

Be careful with just RAID 5, particularly at larger sizes. I've seen a couple instances of the lengthy, I/O intensive rebuild killing off one of the remaining disks and subsequently ruining the array. Not a problem if you have backups, but just something to be aware of.

RAID5 / RAID6 or RAID10 are way better than a local SSD/HDD that can fail.

My point was not which exact RAID variant one uses but the advantages in general of a central NAS system.

A disappointing laptop followed by an upgrade of my workstation > great performance (all Windows 10). My photos are 46MP, raw, 14 bits depth. Here are my PC LR/PS experiences and insights.

Notebook: Core i7 9K series with 16GB RAM, discrete NVIDIA GPU with 4GB video RAM and 1TB "drive" (4K OLED touchscreen) - Thunderbolt 3 to external storage. Running with hardware acceleration, I could not get Lightroom to run stably with default settings. Starting a web browser next to it would kill the whole experience. Part of the problem is that the video adapter claims 8GB of system RAM under the "shared memory" title. A call with the Adobe helpdesk got me a techie who told me to alter memory parameter settings. This improved the experience but ruled out running anything else alongside it: I needed a clean start with nothing else running.

The notebook now is earmarked for tethered shooting and marginal edits in the studio and on the road.

Workstation: My workstation had "died" in a failed BIOS upgrade two years ago, and I then and there started an Odyssey to try to use only the notebook. I have not spent the 10 years, but so far can only report failure in that. A couple of weeks ago I was fed up to the point that I decided to revive the old workstation: new motherboard and CPU, reusing everything else.

In came the ASUS ProArt Z490 motherboard (it has onboard Intel RAID that my old workstation used, as well as Thunderbolt 3, and comes with a 10Gbps Ethernet card) with a core i7 10700K. I reuse 32GB of 2,666MHz RAM (4x8) and a GTX 1080 Ti GPU with 11GB video RAM.

The main configuration runs on 3 RAID 1 sets of two S-ATA SSD and a 1TB scratch volume runs off 4 256GB S-ATA SSD in RAID 0 off a PCIe x1 card. The RAID 1 are on Intel RAID, the RAID 0 is a Windows RAID set.

I don't ever do computer games but the FutureMark Timespy benchmark gives a nice indication how fast your workstation is. A history of Timespy - config, score, better than %, date:

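| Config (GPU, CPU, chipset, RAM + video RAM) | Score | Better than | Date |
|---|---|---|---|
| GTX 1070, Core i7-6850K, X99A, 24GB + 8GB | 4,620 | 49% | 2017-06-05 |
| GTX 1080 Ti, Core i7-6850K, X99A, 32GB + 11GB | 6,963 | 80% | 2017-06-26 |
| GTX 1080 Ti, Core i7-10700K, Z490, 32GB + 11GB | 9,436 | 84% | 2020-07-26 |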

These configurations are all without overclocking, with default hardware configuration. The 1080 scores are at 4K, where the 2020 config runs 2 EIZO 60Hz 4K displays (fixed timings).
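To put the three Timespy scores listed above in relative terms, a short calculation helps. This is plain arithmetic on the quoted scores; the short labels are just shorthand for the configurations.

```python
def percent_gain(old_score: float, new_score: float) -> float:
    """Relative improvement of new_score over old_score, in percent."""
    return (new_score - old_score) / old_score * 100


# Timespy scores as quoted in the history above.
scores = [
    ("GTX 1070 + i7-6850K", 4620),
    ("GTX 1080 Ti + i7-6850K", 6963),
    ("GTX 1080 Ti + i7-10700K", 9436),
]

# Gain of each configuration over the one before it.
for (old_name, old), (new_name, new) in zip(scores, scores[1:]):
    print(f"{old_name} -> {new_name}: +{percent_gain(old, new):.1f}%")

# Gain of the last configuration over the first.
print(f"overall: +{percent_gain(scores[0][1], scores[-1][1]):.1f}%")
```

Note that Timespy is a graphics-oriented benchmark, so these percentages describe the benchmark result and not Lightroom or Photoshop speed directly.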

The Lightroom/Photoshop performance of the 10700K config is so fast that I am still frequently surprised. I look away because I am used to something taking time, only to find out it was already done in the blink of an eye.

I have not changed any LR/PS configuration setting here. Which points to problems in the Adobe software optimizing its settings by itself as per notebook experience. I do not appreciate lazy developers programming "scalable" * software and leaving the main scaling parameters to the user.

I also ran the Tomb Raider (Shadow) benchmark and this gives minimum 45fps with 100% GPU bottleneck. The 1080 does not have dedicated ray tracing acceleration and as long as LR/PS do not use that type of processing in their graphics code you will not benefit from newer RTX cards, IMO. As real ray tracing does not happen in regular video processing, this may also apply to video editing.

These numbers indicate that an older 1080Ti with its 11GB very fast video RAM still goes a long way in LR/PS acceleration - no need to upgrade. The 32GB RAM is a minimum in my opinion - it is comfortable and does not feel limited, but if/when I upgrade my significant other's workstation, I will move my 32GB RAM to hers and place 64GB in mine.

Overclocking? I bought the Intel K processor version that supports it. But I will never use it. The CPU goes up to its turbo frequency incidentally - fine. You could try to play with different RAM speeds, but this is difficult wizardry. Even running RAM at a higher than default XMP setting ALSO requires adapting the CPU's memory controller (system agent), which has its own clock. If your motherboard does not include that in its clock AI, then good luck figuring it all out. And running a higher clock can actually degrade performance. My wife's 6850K workstation gave 1,000 more Timespy points at a lower RAM clock ... which has to do with misalignment in my system between the processor's clock, the system agent clock and the RAM clock, and thus the introduction of more/longer waits.

Other reused components: Noctua N14 CPU cooler, Seasonic 750W 80PLUS Titanium PSU, Fractal Design Define R6 tower case.

The RAID 1 pairs are all Samsung "PRO" which are more expensive than their "EVO" siblings because the Pro version has better memory cells that can sustain a lot more rewrites per cell (after the first time a cell is filled, it needs to be wiped with an over-voltage to accept a new value and this wears cells out. Very fast. Read up on "TBW").

If you set your system up, you could distribute data and configuration over separate I/O channels. Consult your motherboard block diagram and "shared bandwidth" diagrams to see how your I/O channels run. All I/O devices on the South Bridge chip are on a switch in that chip, fighting for access to the CPU. In my setup the PCIe x1 expansion card (about 40 currencies) has direct CPU access through its dedicated PCIe lane that does not compete with anything else. And RAID 1 causes sequential use of the 4 SSD. Yes, your M.2 NVME can be on the South Bridge, competing with USB, TB3, S-ATA and a lot more.

Consider the steps in your workflow, e.g. in a flow chart drawing and note for each step where there's reading in your computer and where there's writing. Reads and writes may happen concurrently and thus will cause delays if they are on the same I/O channels. Spreading data across physically separate volumes can speed up a lot - read from source A and write to B, preferably with A and B on different I/O channels, not blocked by the South Bridge (Z490 in my system).

This also applies to the "page" file. I have tried to run Windows with no page file and it does not work out well. Forget it. If you have lots of RAM, that should be possible when all software has been written efficiently. Again, forget it. But put the page file on an I/O device that does not compete with the rest. Note that the system volume is half a page file: binaries (executable code) are never "paged out" but just "forgotten", so if a binary code page is needed again, it is read from the page in the binary file on the system volume.

I'm considering another PCIe card for that page file ... but it needs to be x1 or else it cuts into lanes now used for the x16 graphics card (see shared bandwidth diagram in motherboard doc).

In other operating systems I could create volumes on separate disks that I mount on a folder path and thus keep operating system and application binaries separate, or even application data separate. Mounting in Windows is not reliable and a pain in the rear end. It works for my e-mail database on a separate RAID 1 volume, but not for data that may be needed early in the system start up.

Windows 10 ed. 2004 (=2020H1). I had to rebuild the system with W10 (64 bits, Pro) and for a new install only had access to a 2004 version with lots of noise in the mass media about bugs and issues. I made sure to have the latest drivers available at install time and injected those in the early installation process of Windows. The system made a stable and robust impression from the start. There were two "blue screens" and a dump file inspection pointed to the NVIDIA driver. Well, that driver got an update two days later and since that moment, the system is extremely stable.

Note my Timespy and other benchmarks were run with the NVIDIA "Studio Driver" - as nowadays NVIDIA have two versions: the game optimized versus the studio driver where the game driver gives a bit more speed at the expense of stability.

One Windows 10 detail. Windows 10 has this fast reboot trick that it switches ON by default. If you are sadistic to your masochist self then leave that as is. SWITCH FAST REBOOT OFF. Updates cannot properly handle this and without fast reboot the boot up time is fast enough anyway. So, the first moment in installation you have logged into Windows, switch it off. Before installing anything else. Also, figure out "Windows Restore Points". Google these things.

W10 Home versus Pro. Both versions are in the same installation binary. Your license code activates one or the other. Always use "Pro". It enables you to make additional local accounts with limited rights. Always work in the limited rights "unless". Use one limited account to write your RAW files to your NAS device and another with read-only access to start importing into LR, culling, base edits, renaming and saving to another volume where the base edits live, etc. By using authorization this way, you prevent a raw original from getting lost or damaged by human error. It also helps to keep the work of different shooters apart and identifiable. Plus it cuts up the workflow into sections that you could have done by different support people in your post office.

Note that putting all edit data in .XMP sidecar files means those edits can be easily reused when you build a catalogue from scratch.

As to "limited rights" accounts in W10 Pro versus Home: in "Home" start Windows Disk Manager. It starts and asks if it is allowed to run under "Administrative Privileges" (meaning it runs as a super-user). Just click "Yes" and it proceeds. Now start Disk Manager in "Pro" from inside a restricted account. It loads and reports you are not a super user. It shows nothing and you can only cancel. So if you need to do Disk Management, you have to lock or sign out from the restricted account and log in to your system administrator account.

Data integrity. Read up on "spontaneous bitflips" before you proceed. Yes, these are a thing. RAID 0 has no redundancy (hence the 0) and protects nothing; it can only make things faster. RAID 1 has redundancy, but it only protects against the loss of a drive and does not protect the data itself. RAID 5, however, has innate support for data integrity protection because it stores the data once as data and a second time as parity computed from that data. This enables "bit scrubbing", a process that scans your data and repairs bitflips. Do you need this?

Well, yes. By the way, the Intel RAID controller runs a bit scrubbing check on RAID 1 volumes too and is able to repair inconsistencies depending on which they are and what context avails. Lousy Windows and other App updates may prevent your system from shutting down properly and you have to use the power button to force shutdown. This happened a few times and in 25% of cases, the next Intel RAID scan reported one data error that had been fixed. Without the RAID controller this remains invisible and unrepaired. Or, put your important work on RAID 5, at least the backup thereof.
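The idea of storing data "once as data and another time as properties of your data" can be illustrated with XOR parity, the scheme RAID 5 is built on. This is a toy sketch over in-memory byte strings, not how any particular controller is implemented; the block contents are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three hypothetical data blocks, one per drive, plus a parity block.
data = [b"RAW1", b"RAW2", b"RAW3"]
parity = xor_blocks(data)

# Simulate losing the second drive: rebuild its block from the survivors
# and the parity block.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])

# Simulate a bitflip in the second block: recomputing parity during a
# scrub no longer matches the stored parity block.
flipped = bytes([data[1][0] ^ 0x01]) + data[1][1:]
scrub_matches = xor_blocks([data[0], flipped, data[2]]) == parity
print(scrub_matches)
```

One caveat worth keeping in mind: a single parity block reveals that something in the stripe is inconsistent, but on its own it does not say which block is the bad one, so real systems typically combine it with other information (such as drive error reports or checksums) to decide what to repair.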

*) Scalable software. Old software engineering would program for a fixed program setup - not "scalable". So a transaction program could be coded to support two users, or one or four. It had a fixed memory and CPU load. From a capacity management point of view this is very transparent. If you went from 2 transaction users to 4, you needed to be able to run two program instances at the same time.

Scalable programs can increase the amount of memory they use in order to support more of something they do, e.g. when the demand happens. The risk of these is that these programs can continue to grow in their use of memory and CPU when developers/programmers do not place limits in their code that could depend on the configuration the program is on. The second risk is that a programmer writes code to grow resource use, but forgets to scale down when opportune and this is called a memory leak. Altogether this makes capacity management with scalable programs way more difficult as q.e.d-ed by Adobe with LR and PS.

I just recently switched from an overclocked i9 9900K desktop with 64 GB of RAM to a 16" MacBook Pro and it was a huge difference. I don't know if it's some sort of Apple magic or just Adobe optimizing for Macs, but this machine runs circles around the 9900K in both Lightroom and Photoshop despite being worse in basically any other benchmark. We've now all talked internally about getting Macs, despite being a group of die-hard PC gamers, because it made that huge of a dent in my workflow while also losing all of the bugs that Lightroom on Windows had.

Interesting. I had such poor performance on a highly specced MBP a few years ago that I basically stopped trying to edit on the road, instead just deferring it to my computer. What kind of camera's files are you running through it?

We shoot 30mp raw files on a Canon EOS R and usually HDR them in lightroom. Performance is still noticeably better even when I bring out our old 6D with 20mp raw files.

Good to know. Looking at the comments, it seems like there's no consistency between OS/specs/file types as to performance.

With overclocking recent processors, cooling is super important. Especially with that chip... Not enough cooling = throttled CPU.

I just built a computer with an i9 10900; the cooler is a Noctua D14. There's nothing I can do to get the CPU temp above 60C. The result is that the turbo boost speed stays near the all-core max.

I have the i9 9900k running on a Corsair H100i liquid cooler. With all 8 cores locked at 5.1Ghz it only gets up to 71C. Generally won't even get that high unless I hit it with a synthetic benchmark or 3D render.

The Apple magic I am familiar with is that in the XP days, Apple could use >4 GB of RAM in 32-bit OS X. That made all the difference! Now, 15 years later, it's the opposite, as most machines still only have 8 GB of RAM. Your MacBook may be running faster because the OS is still fresh. The only way to render videos faster is Linux.

Both the i9 system and my MacBook Pro have 64 GB of RAM, granted the RAM on my MacBook was $800 since they solder it in. I've reinstalled Windows on the desktop periodically and it helps performance, but it doesn't even come close.

I've also hackintoshed that desktop, which was my first clue about OS performance. Despite a few bugs relating to updates, that same desktop with macOS was way faster and lost all of the Lightroom-specific bugs I had on Windows 10. That system kept having problems, which is why I went and bought this overpriced laptop.

Linux would probably be the best choice if we could ever run Adobe stuff on it. Linux is still my favorite OS by far, but I can't get any work done on it. Although with how well gaming on Linux has progressed, maybe someone will get Adobe software working on Linux.

I got the same specs as Ryan and it flies for me. I can flip through about 5-6 photos per second in the grid mode for culling and when I turn the dials on my midi controller for Develop module edits it is pretty much instantaneous. Working with 60 megapixel images from the a7rIV. Not sure what it is that makes everyone's experience so different. 200gb cache?

I use a 5k mac and a 100mpx Hasselblad Hy6 for 27 x 100mpx landscape stitch in LR with ease - what is the problem again?

Years and years of the same mantra on how to "Actually Improve Lightroom and Photoshop Performance". Well, the best speed improvement to my workflow was moving away from Lightroom. When Adobe suggested dual core, I had 6 cores; when they suggested 16 GB RAM, I had 32 GB, 4-channel; when they proposed dedicating 12 or more GB to cache, I dedicated a whole separate SSD (120 GB) to cache, had images on a separate SSD, had the system on yet another SSD, had a top-level GPU... Well, the crappy old skeleton worked only as well as on a years-old laptop with 8 GB RAM, one SSD, and a 2-core processor.

Lightroom is ancient software that is internally 32-bit, and it can properly address only ONE processor and only 3.5 GB of RAM. That is valid for everything regarding brushes and other internal corrections, like perspective, etc. On the outside, though, they modernised it, and importing, exporting, and rendering previews were flying on that high-end machine. But hey: I would rather go to lunch during import and then edit smoothly than vice versa.

GPU acceleration helps with handling the image (zoom, pan, move), but has a detrimental effect on brushes. I was constantly turning it off and on. More cores = bad in Lr. One highly tuned (overclocked) core was better. I imported stuff on the high-end machine, then edited on a sporty overclocked 2-core i5, then exported again on the 6-core. Or I just deactivated a few cores in Task Manager. It helped.

But all this hassle ended when I accepted defeat and started to use Capture One. Compared with Lr (calculated on a few projects), I save a fortnight of work time per year just from smoother, faster work. Adobe could pay me to use Lightroom and I would still be done with it. I need to open it here and there to search the old database, but that is already a pain. If I think how much I paid to Adobe, and they mistreat us so...

You are right. The time wasted trying to figure out LR is probably better invested in the C1 learning curve. Oh god, I just spent 2 hours trying to figure out what went wrong with my LR reinstall on a new SSD, just to figure out that there was nothing wrong. Even 6 months after the release of the 1DX3, Adobe has not yet provided the Neutral Camera Profile!

After 10 years of buying upgrades for Premiere Pro, Lr, etc., Adobe did not fix stuff but always added something else. Starting with CC, the story worsened. They started to change stuff for the sake of change, to disguise that the new version is still the old version with all the problems, just in a new rounded GUI. I'm done with Adobe. Paying endlessly for the same old software. C1, on the other hand, communicates very closely with its audience.

Lightroom scales to 4 cores very well. I agree 8 cores is an embarrassment. What's this about 32-bit software? LR and PS have been 64-bit for ages.

My personal experience with LR was so-so until I moved to an i9 10900 (10 cores, 20 threads). First thing I did was look at core usage when building 1:1 previews - 20 threads at 100%... I wonder why your experience was so different.

I've got a similar experience with 16 threads, where previews and exporting parallelized well.

Jon, read me carefully. In any render task, all cores are used. That part is fine. The editing part is not, though.

David, the core of Lightroom is 32-bit. On a 6/12-core CPU with 32 GB of 4-channel RAM and NVMe SSDs, Lightroom stalls when features are used that can't address more RAM than 32-bit allows. It's obvious: when I export, all cores are used, some 20 GB of RAM is sucked up, and the render is outstandingly fast, no slower than from CaptureOne. But when I use perspective or some masking brush, performance is the same as on a 2010 laptop with 8 GB of RAM and a 2/2 core. CPU drops to 16% utilisation and RAM use sits at 2.5 GB. It does NOT scale to more than one core for crucial features, which even Adobe confirmed when I was in a group that was testing new "hacks" for better performance on multicore machines. The hack was to exclude utilising more than a few cores, and it is incorporated into new builds. One can just "disable some cores in Task Manager" and get the same result. When using CaptureOne, the CPU goes mad with similar features and work is fluid. Lightroom stalls at 16% CPU usage. On a 2-core laptop, it goes to 50% and then stalls. If I locked all cores but one, it went to 95% CPU utilisation. Then I tested on a highly overclocked 2-core machine, with a clock speed well over my 6/12-core main machine, and Lr got wings. It acts like 32-bit internally and performs like it. It needs one high-speed core, not a bunch of low-clocked cores. But for import/export/previews, the opposite is true. For those who paid attention to Adobe products, they also said this years ago when CS4 was released: that they had now made concurrent threads execute so that more than one core and more than 3 GB of RAM could be used in parallel. Since the SAME bugs and problems are still here from version ONE, along with the previously mentioned behaviour, we can surely say they never rebuilt Lr from day one, and scaling is still achieved by running multiple 32-bit threads internally - WHEN/WHERE POSSIBLE. It works fine with multiple images being rendered. But when ONE image is edited... no go.
They would rather flog that horse, lower the price a bit, add new "candy" features, and try to attract noob users who will never experience this, since they don't know better and never will, since they have more shortcomings of their own than Lr itself and probably have a low-spec machine (so Adobe can always say you need a better machine, and by the time they have it, Adobe can say the same again). Proof: Adobe has tens of millions of subscribers, and the majority are noob users who do not care. Few professionals left? Who cares. Consumer features added, one-click features added, real bugs not addressed. Why bother, if the noob user will never know the difference? It's stupid for me to still use it, since I can see the pattern: since version one, performance has been the same, but files are now 10 times bigger and my demand to complete a job is 5 times greater. One will say the new Lr is optimised and now works better. It does. It works 20% better... it's like upgrading from a Pentium 4 3.0 to a Pentium 4 Extreme 3.73 GHz in 2021, when the weakest processor has 8 times the power of those.
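The utilisation figures quoted above are roughly what a single fully busy core would produce. A back-of-the-envelope check, assuming the monitor reports the average load across equally weighted cores and that exactly one of them is saturated (the function name `single_core_ceiling` is illustrative, not taken from any tool):

```python
def single_core_ceiling(core_count):
    """Overall CPU utilisation (percent) when exactly one of `core_count` cores is fully busy."""
    if core_count < 1:
        raise ValueError("core_count must be at least 1")
    return 100.0 / core_count


if __name__ == "__main__":
    # Compare against the machines mentioned in the comment:
    # 1 core enabled, 2-core laptop, 6 physical cores, 12 logical threads.
    for cores in (1, 2, 6, 12):
        print(cores, round(single_core_ceiling(cores), 1))
```

Under that assumption, one saturated core out of six gives about 16.7% and one out of two gives 50%, in line with the reported numbers. Counted against 12 logical threads, one busy thread would be about 8.3%, so a 16% reading could equally mean about two busy threads. Either way it points to work that is confined to one or two threads.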

Thank you for sharing this. My 2015 machine feels fast enough for all things, and I felt my LR performance was limited by software alone. For video, I want to pull my hair out. -- You confirmed what I always thought. I wonder if the non-Classic LR works better. Was it built from scratch? Maybe.

I don't understand why people stress so much on export speed. After an edit session, I need a break from my computer. It can take an hour if it likes. It's the actual culling and standard edits you mention that have always felt slow.

What's your experience having preview and library files on an SSD vs a separate scratch HDD? I'm just so desperate for more speed that I don't want to move them to something 'slower'.

It depends on what you are doing. I don't see more than 4 threads get used during most of my editing, but it will use all 16 threads for exporting or making previews. I've also seen Lightroom use upwards of 40 GB of RAM on my systems, so it's definitely 64-bit underneath.
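The reasoning behind that last point is simple arithmetic: a process with 32-bit pointers can address at most 2^32 bytes, or 4 GiB, so a single process seen using far more than that has to be 64-bit. A minimal sketch of the check (function names are illustrative, and it says nothing about how individual features are threaded):

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def max_addressable_gib(pointer_bits):
    """Largest address space, in GiB, reachable with pointers of the given width."""
    return 2 ** pointer_bits / GIB


def fits_in_address_space(observed_gib, pointer_bits):
    """True if the observed memory use could fit in a process with that pointer width."""
    return observed_gib <= max_addressable_gib(pointer_bits)


if __name__ == "__main__":
    print(max_addressable_gib(32))        # address-space ceiling for a 32-bit process
    print(fits_in_address_space(40, 32))  # could 40 GiB fit in a 32-bit process?
    print(fits_in_address_space(40, 64))  # could it fit in a 64-bit process?
```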

I've been on an upgrade spree over the past few years since my photography business has been growing and I've needed to be better about how long I spend editing. We've had it on at least 10 systems over the past 3 years and I have a few takeaways.

-During editing, you probably only need 4-6 threads, the faster each thread the better. Extra threads are nice if you want to have anything running in the background.

-During export, it seems to scale to as many threads as you have. I've seen it use up to 32 cores.

-Lightroom's minimum RAM spec is way too low. It gets noticeably better as you add more RAM up until about 64 GB, at least for my workflow.

-GPU accel is really bursty and even though it never shows high usage, it seems to get better with higher end GPUs.

-Lightroom is night and day better on macOS. The first time I tried it, I hackintoshed that i9 system just for fun and despite a few bugs, it ran multiple times faster than it did with the same hardware on Windows. The bugs ended up being a deal breaker for me, so I got a real Mac, and despite the disparity in hardware, the MacBook Pro still came out on top in terms of Lightroom performance. For reference, the MacBook Pro gets a Cinebench R20 score of 3399 compared to the 4496 of the i9 9900K desktop.

Despite all that though, I wouldn't really say any of my systems has good Lightroom performance. The MacBook Pro is the best of the bunch, but it will still hiccup every so often and will sometimes lag on brushes when zoomed in at 1:1. I've been meaning to give CaptureOne a shot, but my workflow is heavily tied to Lightroom and I have people under me that I would have to completely retrain if we switched.

Google "slow lightroom" and it comes up with about 4,580,000 results. Seems Adobe really doesn't give a sh*t, 'cause Lightroom has been slow for over a decade.

If I Google "fast lightroom" I get 14,000,000 results. Your point that Adobe doesn't care is accurate. But generally speaking, a Google search result count is never really evidence of anything.

But did you open some of those "fast lightroom" links? They are "how to make lightroom fast" :)