Artificial intelligence is bringing incredible advances for photographers, from relighting portraits and making people smile, to cleaning up skin and swapping out skies. However, photographers everywhere would beg developers of photo-editing software to harness deep learning to create one simple tool.

Mirrorless cameras have meant some significant technological leaps for photographers: live histograms, smart autofocus, and silent shooting, to name but a few. One disadvantage, however, is that without that handy mirror hiding your sensor every time you change the lens, you tend to collect dust, not helped by the fact that the static charge on the sensor itself acts as a magnet for small particles. A few manufacturers hide the sensor with the mechanical shutter when the camera is powered off, but once the mechanical shutter becomes completely obsolete (three years? Five years?), this problem is going to return. Shooting in dusty environments can be a nightmare.

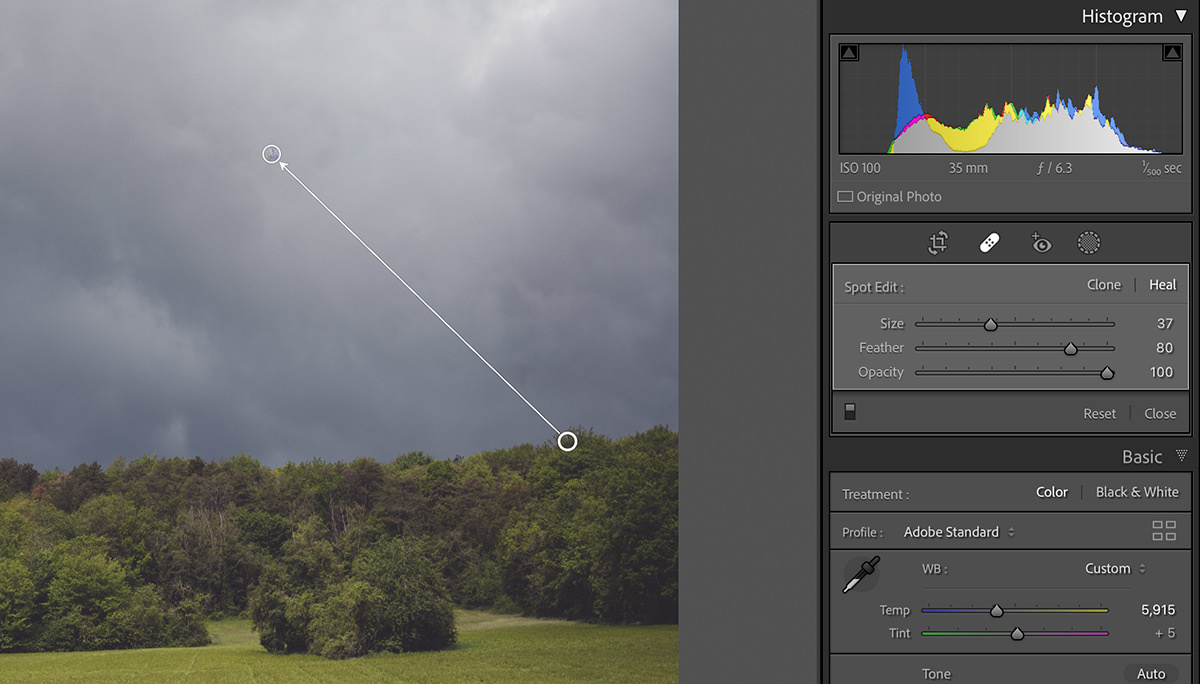

Every photographer’s bane is sifting through countless raw files and removing spots of dust. The healing tool in Lightroom can be painfully slow if you’re editing raw files from a relatively new camera boasting upwards of 30 megapixels on a machine that’s more than a couple of years old. Click. Wait. Stare in disbelief at the inexplicable choice Lightroom has made when choosing where to sample from. Show pins. Drag the source pin to somewhere less insane. Wait. Click. Wait. Repeat.

No, Lightroom. Just no.

To deepen your frustration, Lightroom has a handy feature that can help to reveal dust spots but this just emphasizes the struggle. “Here are your dust spots,” Lightroom declares gleefully. “Have fun with those. Hope you don’t have anything important to do.”

What mystifies me is that this is a universal problem that has plagued us since the very first digital camera with an interchangeable lens, and yet, engineers seem more interested in giving someone an unsettlingly weird smile or letting landscape photographers awkwardly fake golden hour than solving a simple problem. My disclaimer: I know very little about artificial intelligence, but I feel confident in saying that if a machine can be taught to identify someone’s lips, nose, and eyes, a dust spot should not present too much of a challenge. And if we can swap an entire sky, patching tiny blobs across a batch of images doesn’t seem like a big request.

Thank you, Photoshop. Very useful.

Here’s my dream: I get home from a day’s shooting in the mountains and import some images into Lightroom. As part of the import process, I click a box that says “detect and remove dust,” and as Lightroom copies and processes the files, it finds every speck of dust and eliminates them using the healing tool. If it’s not sure, it flags the images and asks me to approve or tweak its changes. Sorted.

As I pondered my dream, I stumbled upon one of the features touted by Skylum for the forthcoming Luminar Neo, its new photo manipulation software that’s due to launch, well, soon. My prayers may have been answered, but if you’ve ever wondered if Luminar software is suitable for serious photographers (whatever they might be), the promo video from Skylum might offer you an answer.

I’m not about to ditch Lightroom, not because I think Lightroom is the best, but because I’m too lazy to change, both in terms of moving my catalog and the long process of learning to use new software. Given that Luminar has been keeping Adobe on its toes in the last year or so, I’m hoping that automatic dust removal might come to Lightroom shortly. Failing that, I’m wondering if DxO PureRAW might soon gain such a feature. Like a few other non-Adobe brands, its use of deep learning is innovative, and if Adobe isn’t keen to add this technology anytime soon, paying a one-off fee for software that pre-processes your raw files ahead of editing in Lightroom could be a nice solution.

Should developers have given us AI-powered dust removal long ago? Who will be the first to offer this feature and how much would you be willing to pay for it? Let me know in the comments below.

The Z9 would suggest the mechanical shutter is obsolete. It just has to filter down.

Re dust removal, spotting film is nasty.

Bravo for this.

However, in all seriousness, I think reliably identifying dust spots probably isn't that easy. And it's no good if the software finds 8 out of 10 and leaves 2 that are really noticeable to human eyes.

Actually reminds me of the old "Sony star eater" issue of yesteryear ;-)

But it's a lot easier to clean 2 noticeable spots than lots of less noticeable ones.

My tip is to avoid dust on your sensor. I look at my sensor before each shoot and there MAY be one piece of dust on it. I blow it off with a hand blower that has a filter (so you are not blowing new dust onto the sensor). Plus, I keep my camera turned down when changing lenses (so dust doesn't fall onto the sensor) and ALWAYS have the body cap on when there is no lens attached. I really cannot believe the people in videos who complain about dust issues when they are leaving the body cap off of the camera! And one more thing, I run the camera's sensor cleaning every time I put a lens on the camera. I have to deal with dust on my photos once or twice a year. This usually happens when changing lenses in a windy environment with no time to run the camera's sensor cleaning.

That is how you should do it. You can easily detect spots like this: Put a lens on the camera, set the aperture to f/16 or smaller, point the camera at the sky, turn the AF off, and defocus so that the sky is blurry. The small aperture will "sharpen" the dust spots and make them visible.
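For anyone curious how software might read such a test frame, here is a minimal sketch, not anything Lightroom or Luminar is known to do. It assumes the defocused sky shot has already been reduced to a grid of brightness values between 0 and 1, and it flags pixels that are clearly darker than the frame's median. The function name `find_dust_spots` and the `drop` threshold are made up for illustration, and a real sky has gradients and vignetting that a single global median would not cope with.

```python
from statistics import median

def find_dust_spots(frame, drop=0.15):
    """Return (row, col) positions of pixels noticeably darker than the median.

    frame: list of rows of brightness values (0.0 to 1.0) from a defocused
           sky shot taken at a small aperture.
    drop:  hypothetical threshold, the fraction below the median brightness
           at which a pixel is flagged as a possible dust spot.
    """
    flat = [value for row in frame for value in row]
    cutoff = median(flat) * (1.0 - drop)
    return [(r, c)
            for r, row in enumerate(frame)
            for c, value in enumerate(row)
            if value < cutoff]

# A tiny synthetic "sky": uniform brightness with one darker speck.
sky = [[0.80] * 5 for _ in range(4)]
sky[2][3] = 0.55

spots = find_dust_spots(sky)
print(spots)
```

The same small aperture that makes the spots visible to the eye is what makes them dark enough to cross a simple threshold like this one.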

I rarely change lenses in the field. The new mirrorless bodies are so light that I can carry 3 bodies with 3 lenses in my back pack. I'm fortunate that I can afford this but I always keep the older body when I get the latest body. (You only get 30% of the value of your older body anyway.) The latest body goes with the lens I use the most. The oldest body goes with the lens I use the least. One of the bodies is often a D850 when I'm shooting wildlife.

Thanks for the ad post. In all seriousness, how do I completely block this site's shitty content from popping up on my feeds?

1. By not visiting. Read up on how ads work.

2. If you are on a PC, read up on modifying the "hosts" file. I don't know if Macs have an equivalent.

Happy to help!

--Uncle Ed

You created an account for the sole purpose of asking the Fstoppers staff how to use the block function on an unspecified third party feed.

It's amazing in its stupidity: by visiting the site and creating an account, they have done nothing more than guarantee increased traffic to their 'feeds'.

I'm working so hard to be nicer to people that I deleted the really harsh part of my comment.

Humans...

We don't want to encourage "articles" that are really masked advertisements, do we? So I suggest that if anyone wants to buy this software product, they open a fresh window on their browser and search for the product via Google, instead of clicking on a link in the "article".

Some of you need to stop whining and overreacting to articles that mention or have links to the software.

1. That's what these forums and sites are for. Discussion and discovery.

2. If you were as smart as you are overzealous about ads, you'd notice the links go directly to the software. They are not even affiliate links.

-- Luminar Neo points to https://skylum.com/luminar-neo

-- DxO PureRaw points to https://www.dxo.com/dxo-pureraw/

Even if they were affiliate links, who cares? How do you think they pay for this site that you visit on a regular basis?

Spot removal is the single most aggravating issue in LrC. It is way more time consuming than any other operation. And the sources it selects to fix spots from are often bizarre. I use a Pentax K3 which is quite tight and immune to dust. My spots are the result of 1:1 closeup macro photos of 60-year-old slides that had dust spots that were extremely difficult to remove prior to photographing. Removing spots often yields a "not responding" message from LrC while it is thinking about proceeding. Complete PITA. I too would truly appreciate an AI solution during import. 100% removal is unnecessary, but 50-75% would help!

Ad post or not, having a dust-removal tool would be nice. However, I also don't think an AI would identify dust perfectly, and you would still have to do some handwork (and fixing AI mistakes is sometimes even more difficult than doing everything on your own from the start). So it's either cleaning the sensor or applying some grain effect via Photoworks for now.

Here's a feature that's pretty hard to find in most photo editing software: make a mask and re-use that mask in several different layers and groups, *without* copy/pasting it (so you can modify the mask later and have all those layers reflect the new mask). Or what about a frequency separation function that doesn't require you to bake all the previous layers together first, so you can make changes under your frequency separated layers and have that propagate up?

With 'darktable' you can copy the edits of a module and apply it to any other image you like and then of course alter the single edited spots. And about the second part: This is the normal behaviour of 'darktable'. The same as said before is valid for all modules/functions.

I think you misunderstand my first complaint. In Photoshop, you might be compositing several layers or adjustments together into a single image, but need to recycle the same mask in all these layers. Nowadays, you will often have to copy and paste your mask several times in order to do this, but in an ideal world you would be able to "link" your mask to all the relevant places where you want to use it, so that there's only one place you'd have to go if you wanted to adjust the mask.

I wasn't aware that DarkTable had frequency separation controls, but if that's how it works there, that's pretty cool.

Ah, I see. Yes, that would be a useful function. Thanks for elaborating. If you have the time: what do you think, does this do what you want?

https://docs.darktable.org/usermanual/3.6/darkroom/masking-and-blending/...

See chapter: "reusing shapes" (shapes = masks)

I think one of his points is that it is not present in MOST software, including Adobe, which he uses. Suggesting darktable, which is great for what it is, isn't a solution. I can't use darktable because my work and workflow require the Adobe suite, where I can edit photos significantly more quickly and with more precision than in any other software I've used. Most other software is a Lightroom alternative, which I don't use, and only Adobe allows Photoshop integration with Bridge, Premiere, After Effects, etc.

So I think he (like me) wants this feature in MORE software, including Adobe.

Based on what it says in that chapter, the "add existing shape" option in DarkTable appears to do what I had wanted. That combined with the "Mask Manager" seems like a pretty interesting set of tools for what's basically a raw converter. I'll give DarkTable a pass then :)

To change the topic slightly, the heart of my original comment was that most photo editing software presents adjustments as "Layers" which can be combined as "Groups" and they can be stacked over each other. For video compositing, you'll often instead find a "node graph" paradigm, where any acyclic graph structure is allowed.

From a graph theory perspective, the graph method (a DAG) is more expressive than the layer method (which is basically just a tree). By "more expressive", I mean that you can make the same images either way, but often times you'll have to do a lot more copy/pasting with the layer method. If your client wants you to change something later, that could potentially require you to rebuild a lot of the stuff you copy/pasted or rendered out. Watch any After Effects or Photoshop compositing tutorial and take a drink every time several layers get rasterized, flattened, or "precomposed" and every time something is copy/pasted so it can be re-used and you might die before the end of the video.
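To make the tree-versus-DAG point concrete, here is a toy sketch. Nothing in it is a real Photoshop, After Effects, or darktable API: the `Node` class and the node names are hypothetical, and plain numbers stand in for images. The one thing it shows is a single mask node feeding two adjustments, which a strict layer tree can only imitate by copying the mask.

```python
class Node:
    """One operation in a hypothetical node graph; inputs are other nodes."""

    def __init__(self, name, func, *inputs):
        self.name = name
        self.func = func
        self.inputs = inputs

    def evaluate(self):
        # Evaluate every upstream node first, then apply this node's operation.
        return self.func(*(node.evaluate() for node in self.inputs))

# Plain numbers stand in for images and masks.
mask_settings = {"opacity": 0.5}
image = Node("image", lambda: 100.0)
mask = Node("mask", lambda: mask_settings["opacity"])

# Both adjustments take the SAME mask node as an input: a DAG, not a tree.
brighten = Node("brighten", lambda img, m: img + 20 * m, image, mask)
tint = Node("tint", lambda img, m: img - 10 * m, brighten, mask)

before = tint.evaluate()
mask_settings["opacity"] = 1.0  # edit the mask once...
after = tint.evaluate()         # ...and both adjustments pick up the change
print(before, after)
```

In a layer stack, `brighten` and `tint` would each hold their own pasted copy of the mask, so the single edit above would turn into two edits, plus the risk of forgetting one.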

Many thanks for the answer, Thatcher and thanks to Matt too. I think you are going to like the scene-referred workflow of 'darktable'. Cheers!

As for the jpg+raw, after importing, wouldn't you just filter to show the file type you want...in your case, the jpg's? The raws are still there in the catalog, just not visible until you need them.

As for a photo editing software that produces the exact same jpgs as Fuji's in camera engine, wouldn't you just use Fuji's software? You can edit and export jpgs from the raws.

My best way to keep from annoying dust spots is to never use Sony. I hear of people cleaning their sensors sometimes twice a week (Tony Northrup). If all the Sony users are having this amount of dust spots, it’s not just a matter of having something come down over the sensor; it is something more than that.

I use Sony and don't have the problem Tony has. I've had my a7rii since 2015 and my a7iii since 2019 and have never cleaned the sensors. I just use the built-in sensor cleaner and a rocket blower. With that said, I don't change or mount lenses when out in the field. They are already mounted when I leave the house.

I use two Sony cameras on a regular basis (several times a week) and only have dust issues once or twice a year. As I wrote in another comment, I take simple steps to prevent dust problems.

How about cleaning the camera sensor with a blower every time you change lenses? I don't use a fancy or expensive blower, just one of those nose suction thingies that babies use. But every time I change lenses, I clean both the camera and the inside of the lens that I'm going to use. And I don't change lenses in the field unless absolutely necessary.

Well, for events/sports photographers who change lenses all the time, doing this would be nearly impossible, both time-wise and because we usually do not carry a blower in our pockets or have 6 hands to hold lenses, camera, and a blower.

For someone shooting in studio or landscape it probably can be done but on location (especially on a windy beach or similar) having a camera open and blowing the sensor is not an option at all.

There is no need to tell us you are lazy. We already know that when someone says loud and clear that they don't want to work on their images. There are companies that have a button that very well could be tagged "Edit Artistically", so you can run to them and hug tightly, but probably you'll wave from a distance, because you are lazy. If you don't value your work enough to do the necessary things yourself, with a particular vision for your image, and in the particular case, probably it's not the software that's failing.

[list of unverifiable photographic achievements in 3, 2, 1...]

Holy mackerel, all this rant because the guy wants an auto dust spot cleaner. smh

Here's something about AI - each and every engine has specific deep learning capabilities. What's intuitively obvious to us - people, dogs, cats and giraffes have faces (for example) - is NOT obvious to a deep learning engine. THAT BEING SAID - sensor dust is but one kind of "lens spot": dust on the lens, lens scratches, fungus defects - all have different characteristics than sensor dust. Scratches from digitized film negatives show up as light spots, not dark ones. So while the new Skylum software may have an awesome feature to remove sensor dust spots, I wouldn't count on it being able to handle other kinds of defects. At least not in the initial release.

I think Adobe is just being cheap. I bet if they threw another two million man hours of programming and code-writing into dust removal, they could get Lightroom to do it well. Of course it'd cost a few hundred million dollars, but I am sure it could be done, and done very well, if they invested the required amount of programming into it.

Oh come on! Using the heal tool to dab all your dust spots is fun.

Should have bought an Olympus!

I've had 5 Olympus cameras over the last number of years and have yet to need to clean a sensor. I'm pretty careful about changing lenses, but it is remarkable. I also have a Nikon D850 which stays pretty clean, but I've had to clean that one more.

And the dust spots also drive me crazy. I like to shoot my 14mm full frame at f/22, but it's just too much work to fix all the spots on the lens/sensor.

Same. Never had to clean an Olympus sensor, ever. And Olympus M4/3 is one of my primary systems.

It's not perfect, but in LrC, if you do healing brush spot removal on one image, you can paste just the spot removal to all the other pics from a shoot. They probably all have the same dust spot locations on the sensor. You might have to adjust some of the source repair circles, but for dust in skies it works well and can save a lot of time. Of course, you need to copy and paste the positions between shots with the same orientation, i.e. horizontal to horizontal, vertical to vertical.

About the shutter, and keeping the shutter closed: do not forget there is lube on the moving parts. You can wet clean the sensor or use the sticky pen (orange for Sony and blue for Canon/Nikon) in a clean-air bathroom with a mask on. Then place the camera in a plastic bag when changing lenses. One most important thing to always know is that any lens is sharpest two stops above wide open; with most lenses f/4 or wider, you should rarely go above f/8. You will have the most dust at f/11 and higher. A clean lens back is also a must. You can do all of this and not use the camera for a month and deep eye the sensor and there will be dust. Ever look at your brand new camera fresh out of the box? It needs a sensor clean first, as does a new lens.

Lr's dust removal tool is and was the best thing to come along.

A long-time dust fighter here. How often do you even clean the outside of your camera, and your camera bag as well? (I bet there are a few leaves and twigs in there, let alone dirt or sand or pollen.)

I use a Fujifilm X100 and have no idea what you're talking about.

I'm not sure about an AI dust spot remover as that may be trying to run before finding out if you can walk.

A two-stage process might help, with an AI dust spot identifier stage running first. That way you'd get a chance to proofread the suggestions. Save them, then have the choice of manual healing or AI healing.
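A rough sketch of what that two-stage flow could look like as data, purely for illustration: the `Suggestion` record, the `proofread`, `save`, and `to_heal` helpers, and the hard-coded list standing in for the identifier's output are all assumptions, not features of any existing editor.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Suggestion:
    """One candidate spot proposed by a hypothetical identifier stage."""
    x: int
    y: int
    radius: int
    approved: bool = False

def proofread(suggestions, approve):
    """Stage-one review: mark each suggestion using the reviewer's decision."""
    for suggestion in suggestions:
        suggestion.approved = approve(suggestion)
    return suggestions

def save(suggestions):
    """Serialize the reviewed suggestions so healing can happen later."""
    return json.dumps([asdict(s) for s in suggestions])

def to_heal(suggestions):
    """Stage two input: only approved spots go on to manual or AI healing."""
    return [s for s in suggestions if s.approved]

# Stand-in for identifier output; the large one might be a bird, not dust.
found = [Suggestion(120, 45, 6), Suggestion(800, 610, 40), Suggestion(33, 900, 5)]

reviewed = proofread(found, lambda s: s.radius < 20)  # reviewer rejects the big one
saved = save(reviewed)
queue = to_heal(reviewed)
print([(s.x, s.y) for s in queue])
```

Keeping the review separate from the healing means a wrong suggestion costs one click to reject rather than a repair job afterwards.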

So far my images haven't been troubled with dust spots (Olympus M4/3rds now, Canon 7d mk2 previously), but some AI would help in getting rid of distant birds (Crows, Rooks, Ravens and Gulls) from landscape shots, where the birds resemble slightly large dead pixels.

I can't even see the birds through the lower than sensor resolution evf or even the optical view finder, but they are visible when the 20mp images are viewed at 100%, and do show up on A3 prints.

When you see marketing people claiming their amazing new product uses "artificial intelligence" to identify dust specks, you get an idea of where so-called AI is really at, after more than 50 years of research.

This year an autonomous system (Turkish designed, built, and operated UAV) was used for the first time to kill someone totally absent of human command.

We've had "heat-seeking missiles" for 70 years.

It's all just sensors and pattern recognition.

Imagine going back to the 80s and telling AI researchers that in 40 years, computers would be able to recognize faces on a photo, based on distance measurements, and that's the high point so far. They'd cry.

Heat seeking missiles are a different class of weapon to something like an autonomous loitering munition.

You may view it as meaningless, but the use of such biometric surveillance systems by nation states (the CCP being particularly notable) is anything but.

Then we have things such as proteins and medications being modelled and developed.

Edit: there's some awesome stuff being done in aerospace design.

Awesome, and potentially very dangerous. Possibly already in the service of evil.

I just take issue with the use of the term "intelligence" every time software does something new that used to seem difficult, like protein folding or speech recognition.

It's very early days, and this stuff is moving incredibly quickly.

It is an error to assume human intelligence and machine intelligence are the same, or even similar.

In any case, morality is utterly irrelevant; this is the world we are in.

Tbf machine learning models for images really only took off in about 2012 with AlexNet. Before then, a lot of these larger models wouldn't be viable because there wasn't enough training data, the hardware was too slow, and the backpropagation (i.e. the learning stage) algorithms weren't really implemented in an efficient way.