Social media has the most confusing and arbitrary policies on what constitutes adult content. If you don’t want to have your account deleted for posting fully clothed people, you need to understand these rules.

Nudity policies on social media are actually very important. They keep platforms like Instagram from turning into sites like Model Mayhem. However, those policies are only effective when they are clear and applied with consistency. To illustrate how confusing these policies are, here are some images that I recently tried to use in paid promotions on Instagram and that were rejected for violating Instagram’s community guidelines on nudity and sexual activity:

Instagram’s Nudity Policy Only Allows a Visible Anus if It Is Photoshopped on a Public Figure

When my images were rejected, I was given a link to review the community guidelines so that I could understand why my content was not allowed. Under Instagram’s policy for nudity and sexual activity, some content is not allowed under any circumstances, and other content is age-restricted. The policy goes on to define nudity and sexual activity. The definition of nudity includes the following confusing clause:

From a grammatical standpoint, it’s not clear if the “Photoshopped on a public figure” part pertains to the visible anus and the fully nude close-ups of buttocks, or just the fully nude close-up of buttocks. The other problem is that it’s not clear what that sentence even means. Does that mean that we can post a fully nude close-up shot only if it is a public figure and only if we liquify or use frequency separation or put a gradient map on it? Or does that mean that we can Photoshop fully nude close-up buttocks onto a public figure? If it is a zoomed-in fully nude close-up buttocks Photoshopped on a public figure, how would they know that it is the buttocks of a public figure? Instagram’s help page says that it uses the blue verified badges to identify public figures. Why is the community a safer place if it can only see the visible anuses and fully nude close-up buttocks of people with blue checkmarks? How does this make any sense?

Instagram Will Allow, With an Age Restriction, Any Sexual Activity if It’s Funny

The Nudity and Sexual Activity Policy further describes content that it will allow, but it will be restricted to adults. That clause includes the following language:

Under this policy, users are allowed to post content that meets the definition of sexual activity (defined as “Explicit sexual intercourse, defined as mouth or genitals entering or in contact with another person's genitals or anus, where at least one person's genitals are nude.”) if there is a funny context. Again, we are not given very much explanation on how to invoke this example or what it means, but under Instagram’s own guidelines, you can post actual hardcore pornography if it is funny or there is some kind of funny backstory.

Blue checkmark people can only show fully nude close-up buttocks, but hardcore porn can be shown by anyone if it's funny hardcore porn

YouTube’s Nudity Policy Prohibits Fully Clothed Buttocks If the Fully Clothed Buttocks are Sexy

Here is the image that I recently had taken down from YouTube. Granted, it is slightly more provocative than the Instagram images, but it is still a fully-clothed woman cropped just around her head, just standing and staring into the camera.

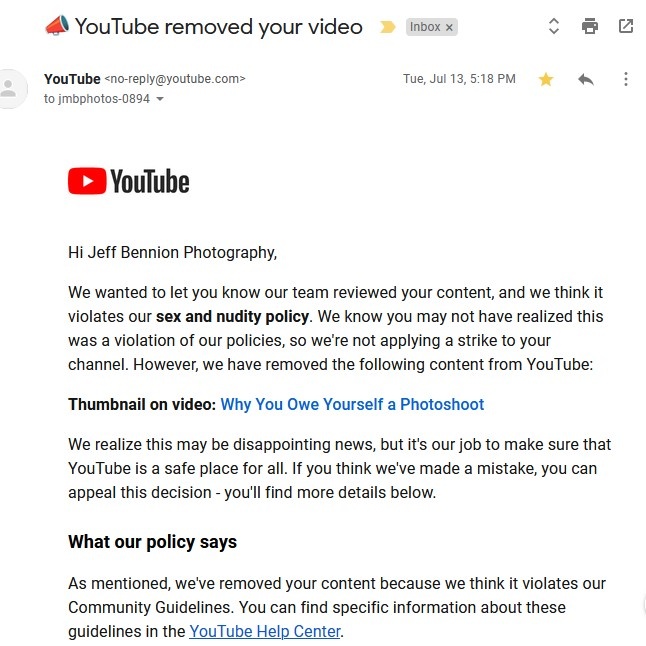

This was the planned thumbnail of a video

Here is what YouTube said:

Again, I was given a link to review the guidelines on nudity and sexual activity. YouTube offers the following guidelines to creators on nudity and sexual activity:

As with Instagram, YouTube’s confusing grammar makes it unclear whether creators are barred from posting depictions of clothed genitals, breasts, or buttocks, or whether the clothed/unclothed clarification only pertains to the buttocks. Regardless, there is either an algorithm or a Google employee in charge of determining whether YouTube videos contain fully clothed buttocks that are for the purpose of sexual gratification, and of sending out emails like the one above.

YouTube Does Not Allow Videos of Kissing that Could Lead to Intercourse or Eating for Sexual Gratification

Google also provides a list of more examples of things that are not allowed, including: 1) kissing in a way that invites sexual activity, or 2) everyday scenarios such as getting injections or eating for the purpose of sexual gratification. I'm not sure what it means to eat for the purpose of sexual gratification, except for maybe eating Velveeta in bed with your wife in a hotel?

The Confusing and Arbitrary Nature of the Rules Is a Problem for Creators

Instagram uses an algorithm to determine which of your followers get to see your content and whether people who don't follow you can see your content through hashtags or on the explore page. The head of Instagram, Adam Mosseri, recently wrote a blog post entitled "Shedding More Light on How Instagram Works." In his post, he touched on shadowbanning and how the algorithms work. Or are supposed to work:

The "Recommendation Guidelines" referenced in the last sentence can be found on this page. It states:

So, when you post content and it is determined that it violates the poorly drafted and confusing community guidelines described above, there is a period of time during which Instagram hides your content from the explore page and from people who follow your hashtags. The result is a complete lack of certainty about whether your account will be punished and demoted for any type of content that contains women if the woman is wearing jeans that fully cover her buttocks but her buttocks are for sexual gratification. Or if you post a video of a woman kissing in a way that could later lead to sex. Or if you post a hardcore pornography scene that is not funny enough to meet the guidelines. Or if you post fully nude close-up buttocks of a celebrity, but you edit it in Luminar instead of Photoshop.

The rules make no sense. As Adam Mosseri explained, they don't even have a system in place right now to let you know what you did to have your content not recommended to other accounts. After many years, they are finally working on letting people know when they break a rule and are secretly punished with an unexplained drop in engagement and reach for an undetermined amount of time.

The first step is to come up with some clear guidelines, not this clown porn blue checkmark buttocks mishmash of words. The next step is to find a way to consistently apply the rules. The third step is to clearly notify users which content penalizes their account and how long that penalty lasts. If you want users to post less of the content that you don't want on your platform, you have to let them know when they violate the rules and what the punishment is.

One thing I think may be happening here, and it happened to me: assuming your promotions are listed most recent to oldest, you've been labeled a "troublemaker". Read on. The last two images there (which I assume you tried to promote first) - the pole dancer and nude under sheer fabric - are borderline. I mean they're all fine - but think of someone's 65 yr old grandma who goes to church 3 times a week. She'd call them porn/nudity. After doing that, your account got put in some group of "adult content creators" or something along that line and anything questionable was going to get denied. Since they don't actually look at these photos and use some sort of machine learning AI to tell, the rest of them were labeled adult because if you squint your eyes, that could be nudity. I'm also pretty sure that if you appealed the rest of them, you would be allowed to use them. I ran into this a while back trying to promote a boudoir Christmas special that included a photobook. Promoted photo of girl in lingerie holding the book - denied, said to focus on my product. Tried again with a crop of that photo, with book filling 80% of frame, with bra above and legs below -- nudity/adult content. Finally just promoted a photo of the book by itself, open to a page of a girl in lingerie blurred by low aperture -- still adult content. At that point, the time frame that I had wanted to run the ad in had passed so I just moved on. But it sounds just like what you have had happen to you: the more repeated offenses, the pickier they get.

The order was: black dress, face crop of girl in the water, girl in the water lying down with the sheet (happens to be the same model), pole fitness, and then Victorian dress. The problem with the hypothetical grandma is that the algorithms are designed to keep those things off her page anyway. Further, if there's a 65 year old puritan grandmother on Instagram, my page is the least of her worries. My pictures don't come close to breaking the rules. If they wanted to change the rules to: "We no longer allow models who are dressed 15% more than the US Olympic Women's Volleyball Team. It must be 30% more. And if you post a picture of sexy fitness things, we are drawing the line at Bikram yoga, no pole fitness at all," that would be one thing. Instead, they have rules, I stay on one side of them, but I have secretly broken the hidden rules that no one has access to, which gets me secretly punished for an undetermined amount of time, and that carries over to pictures that are clearly in no way a violation even by the strictest Amish standards, and that stain on my account will carry over in secret indefinitely.

The black dress picture was from a year ago and I appealed it and the appeal was rejected.

It's all lazily machine learned. Trying to understand the rules will just give you a headache.

The only way to explain this dumpster fire of policies on big data sites is to say that they're written that way intentionally, so that big data has the option to take down, or not take down, anything they feel like taking down, or not, at whatever moment they choose. They want their users confused, and fearful of reprisal.

I'm confused... are you creators or creatives? Just wondering. Both terms are pretentious and wrong when applied in these contexts but what do I know.

Pretentious means pretending to have greater talent or knowledge than you actually have. So, to go to the comments and complain about members of a creative industry calling themselves creators or creatives because you believe it's incorrect is itself pretentious. The only thing that makes it less pretentious is admitting that you don't know what you're talking about, so good save there.

Instagram is full of half-naked women and sexually provocative images, and even has a bunch of women promoting their OnlyFans accounts on there with teaser photos. I'm surprised any of your content got flagged.

Mentioning OnlyFans will get you banned fast, even as text in a picture.

I'll probably get myself banned on Instagram, but I have 23 images of fully nude women on my samdavidphoto IG page, the only images I post. They were posed or cropped so that no nipples show, no pubic regions, no butt cracks, but there is no question these women are fully nude. I have no idea why my images have not been removed and yours were. Same thing on Facebook, which owns Instagram. Most of my images have been allowed -- and there was no rational explanation for the two that were taken down (and which resulted in brief bans on my posting ANYTHING). Bottom line -- FB's and IG's computer scans of images and the algorithms that determine whether they "violate community standards" are as irrational as Zuckerberg's explanation of the erratic bans of political speech.

I currently have two ads running on my studio page, both of which feature cropped fully nude women where you can't see anything, but you know they are nude. So strange to me that my Victorian dress pics got rejected, but fully nude women did not.

Instagram's algorithm is 100% garbage. It once marked a portrait of Audrey Hepburn (fully clothed and in her hat) as "inappropriate". At the same time I've seen images of fully naked women in bondage in my feed and they were probably considered OK. It makes 0 sense.

Adam Mosseri is an a-clown.