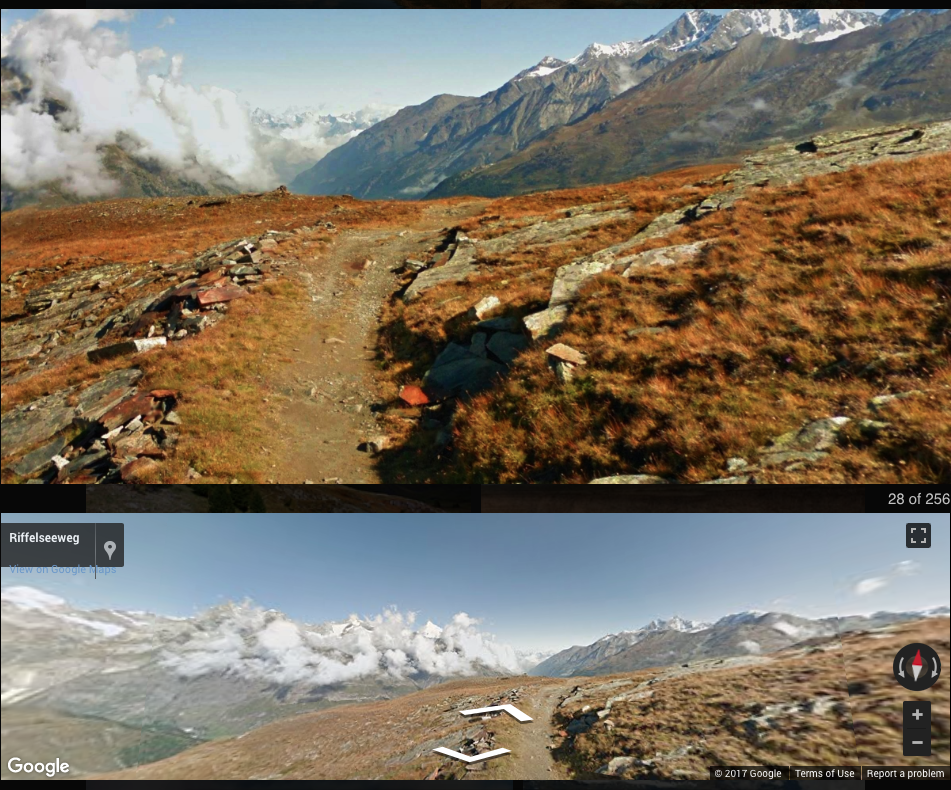

Google has trained an AI to explore its Street View imagery and capture the shots it calculates would work best for the task at hand. The calculation draws on the styles, composition, and post-production of multiple professional photographers, which lets it narrow down which "shot" would make the best photograph. It can then selectively enhance the areas of the image it wants the viewer to pay attention to.

It can also apply contextual adjustments: it enhances specific parts of the image based on what it has learned is important or visually appealing. It's not just a filter applied to the whole image.

I wouldn't really call it photography. It's more of a screenshot exercise: finding places that could make great images. It's also a good reference for what is possible in a given environment. Ultimately, though, I won't be the one deciding what passes as photography and what doesn't. Suppose you display your images on your website, and Google has an identically designed website showcasing its AI's images instead. Who knows which "photographer" the general public would choose to hire for a project?

Would you ever upload a raw image you took and let Google's AI edit and process it, to get an idea of what it thinks is the best rendition of the picture based on your style?

You can view more of the pictures in the gallery and read about the research and development in the white paper published by Hui Fang and Meng Zhang.

[via The Next Web]

I would never upload a raw image I took for Google's AI to edit and process... the art exists in the process, and handing that off to a machine eliminates that part of the creative process.

Yeah, but same-day delivery is great =D. I guess they could also teach it to copy your style, which would make your work faster.

Today this. Tomorrow Skynet becomes self-aware.