DALL·E 2 is a new artificial intelligence system that can create realistic images and art from a written description. No more elaborate styling and lighting setups: you can now just type in a description of what you want, and DALL-E 2 delivers the image. Too good to be true? Too threatening for a photography industry that's forever taking hits? See for yourself with my prerelease test run.

OpenAI, the company behind DALL-E 2, states in its mission statement:

OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity.

If you're a photographer who feels like modern technology is constantly chipping away at your role as an indispensable player in the marketing industry, this statement is sure to make you cringe. I was granted early access to DALL-E 2, and I took it for a test run. Can it really do what we can do? Can it even "outperform" us? Is it a threat to the photographer? Is it a resource? Or is it a combination of both? Let's have a look.

The software has a few functions. The first, and the one it's best known for, is generating an image or artwork from a description. On its Instagram account, for example, you can find the result for the prompt "a blue orange sliced in half on a blue floor in front of a blue wall."

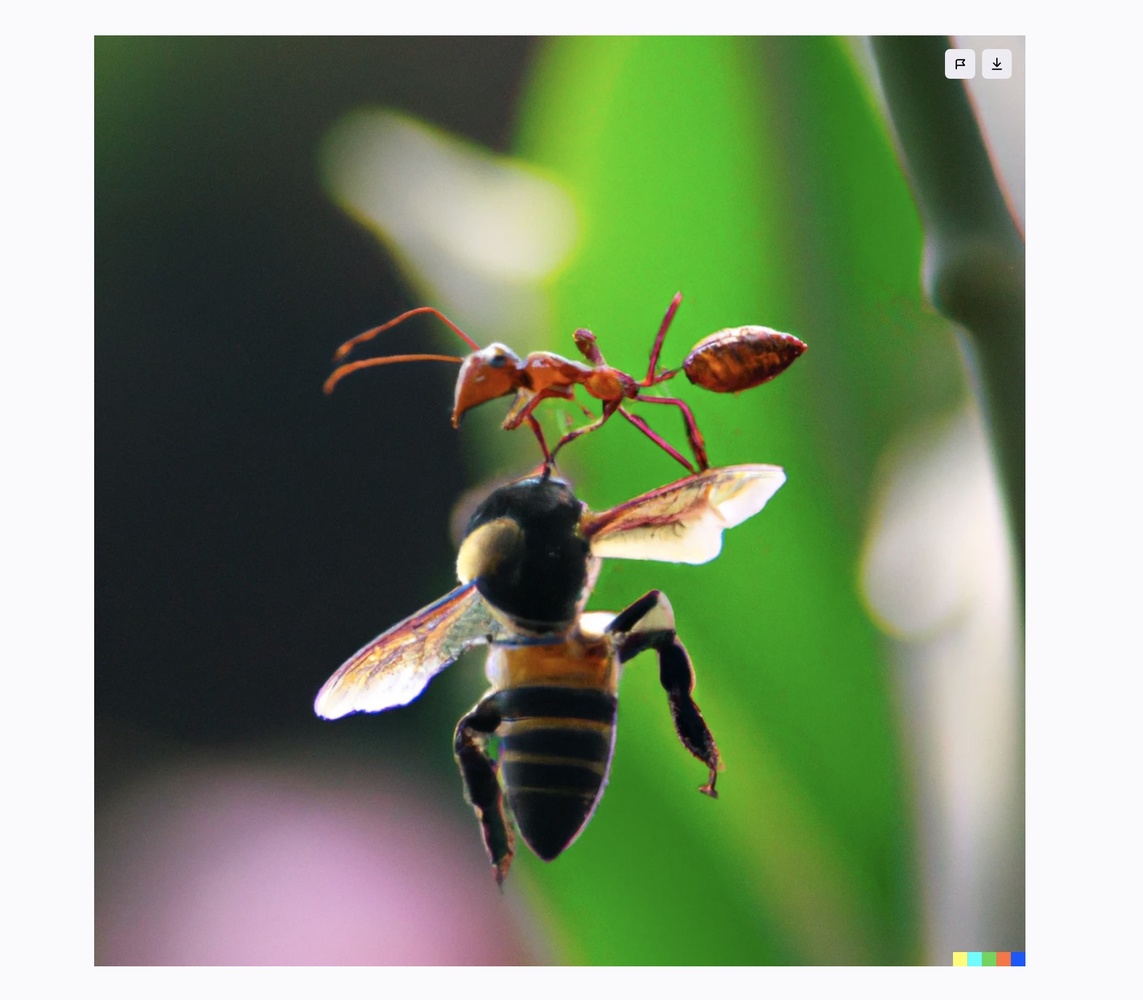

Anyone would agree that the result is quite mind-blowing. I even tried a random description of my own.

There's no denying that the technology is impressive. However, my intention in pre-testing it was to find out if it could do a professional photographer's job. Could a client, rather than hiring us, type in the description of what they wanted and skip the expense of hiring a pro?

Test One: Are the Generated Images on Par With a Professional Photographer's Work?

My first test was to see if DALL-E 2 could generate visual content that could compete with the images I was working on at the time. Case study one: a chocolate made with cocoa and dates. I typed in the description of the image I had created that morning: "a date with chocolate sauce poured on it."

These were the results:

I suppose if you just needed a picture of dates with chocolate, these might do. However, if you cared at all about lighting, composition, color correction, or aesthetics, these images would not meet the standard.

This was my shot for the assignment of chocolate drizzled on a date. @michellevantinephotography

Next, I decided to add a model to the test. The brand had once done a shot where a model drizzled chocolate on her tongue, and it was a very successful image. Along those lines, I typed: "A beautiful woman with chocolate drizzled all over her body."

My first observation was that the AI seemed to have selected Caucasian brunettes as its depiction of quintessential beauty, so I guess I'm out of luck! My second observation was that, as in the previous test, the aesthetics of the images were a complete failure. They looked more like a scene from a Freddy Krueger movie than an ad selling chocolate and lust. The software impressed me in that it could magically generate images from a brief description, but it quickly became clear that it was in no way capable of creating a cohesive set of aesthetically successful images.

Images by @michellevantinephotography

Test Two: Can the Correction Features Be an Asset to the Photographer?

You may have seen DALL-E 2's almost unbelievable correction of a blurry ladybug, featured in this Tech Times article. I decided to try these features as well. My first trial was to remove a shadow and fill the area with a patterned background. I suppose I jumped right into the deep end.

Once I uploaded my image, I selected "Edit Image" and typed in "Remove the shadow of the skincare bottle and fill it with the palm leaf shadow." I was unquestionably impressed with the images it generated.

It significantly outperformed Photoshop, which couldn't match the palm pattern.

For all the criticism I've dished out so far, I really have to tip my hat to the software on that one. Next, I tried another real-life scenario. A salsa client once asked me to swap out the red peppers in the image below for jalapeño peppers. Needless to say, I had to reshoot it. Impressed by DALL-E 2's last correction, I decided to see if it could accomplish the task.

"Change the red peppers to jalapeño peppers."

(crickets)

"T, To May!"?... and the peppers are still red.

A clear failure on this assignment.

Test Three: Can DALL-E 2 Effectively Add Elements to a Photographer's Image?

In my product photography, I do a lot of splashes and crashes. My final test was to see if the software could do some of that work for me. Inspired by the images I shot below, I asked it to add chips to the background.

Image by @michellevantinephotography

Here is the result for "Add tortilla chips to the background."

I also asked the software to add more water ringlets to a shot.

Below is the result for "Add a splash of juice to the background."

That prompt produced no splash at all, just some unexpected additions, like a blurry pineapple creeping into the frame.

Conclusions

After I put DALL-E 2 through a myriad of challenges, it was clear that the software had not yet fulfilled its mission to "outperform" a professional photographer. Though the software is an incredible feat, it doesn't consistently deliver what it's asked for, and when it does, the aesthetics of the image are not up to par. I was surprised by the repair job on the palm shadow, though, and I wonder whether it will position itself as a more advanced tool than Photoshop.

What are your thoughts about this new technology aimed at systems that "outperform humans at most economically valuable work"? Share your thoughts below.

I agree with the article that it's not quite there, but I do like the concept; I could take this into Photoshop from here and make it marketable. This will absolutely put some photographers and graphic artists out of biz. After the first article on FS came out, I applied for a username; below are some of my results. If I were a camera manufacturer, I'd be worried, and as a consumer of cameras, I'm worried that innovation could be stifled. Why should I buy a camera if AI can produce the image? Why should I pay a model if I don't need one? Hell, why do they need me? Elon Musk is correct: AI will be transformative in every industry. DALL-E can be a great asset creator for layering backgrounds, editing, and doing tedious tasks. It's scary, really, as it has advanced dramatically and will continue to do so.

Good point that it's a great starting point. I reached out to the company to get more information about the copyright of the imagery, and they haven't replied. That will be another interesting component.

The software is still in its infancy. My understanding is that its renderings are based on a database of hundreds of thousands, if not millions, of photos it has indexed. At this point in its development, it's probably a case of simple description, simple picture.

In its defense, in the images with the model and the chocolate it probably decided that the fair skin and dark hair of the model showed off the chocolate, i.e. the product, better than a darker skinned model. Without knowing the inner workings of the algorithm it uses to design and render its images it's impossible to say. Don't ascribe malice where it may be a simple case of lack of imagination.

This is a good point and may very much be at play, or it may be that you are rationalizing why these patterns are ok - we wouldn't know unless we had lots of samples and understanding of the algorithm as you said.

This is partially why it's silly to call these things AI. What comes out of these algorithms is still a reflection of human value judgements, which includes 'deciding' that showing off the chocolate is automatically better if it's on lighter skin and darker hair. One may subconsciously agree with that logic, which is why it doesn't even invite questioning, but that is still inherently a human-based value judgement, and we humans are wildly diverse in our perceptions :)

I don't have access yet, but I am on the DALL-E 2 subreddit. There have been a lot of complaints there in the past few weeks about diversity being added to the AI. It now does weird things, like NOT showing people of the 'expected' races or genders. One user was consistently getting black cowboys when he wanted a simple photo of a cowboy on a ranch. But when he changed it to cowgirl, she was white.

There's definitely been some diversity tuning applied, but the system is so complex I don't think it's giving the desired results.

That's interesting. I only did that one test that included people. That would be an interesting component for the developers to keep at the forefront of their minds.

It was more of a comic-relief insert than a jab. I can see your perspective about matching the brown hair with the brown of the chocolate.

Not clear to me where the initial images used for these AI come from. Owners of borrowed images should be legally mentioned with each use and the author credited.

There are no initial images. It 'looked' at millions of images as reference and was trained to learn what these things were. But if you ask it for a photo of a woman with glasses and a half-smile, it 'imagines' her from scratch based on what it 'understands'.

I have no experience with, and no access to, these programs to make my own tests and evaluations. One thing that bothers me about it "imagining a woman from scratch" is the way the models with chocolate look in the images above. It really appears as if the contrast and colors are specific to a separate shoot for each of the three images on the right. It's as if it picked an existing, real image and modified whatever it needed to, but it certainly did not start from scratch. In fact, this is a fairly new technology, and I would be extremely amazed if they reached this level of complexity in such a short time without a massive archive of real images. I'm not saying the process isn't phenomenal, but it's very unclear how it starts each new rendering.

To a computer, any image is just data, and without that data it can't do anything the way we understand images. Without any stored data of what an airplane looks like and the proper keyword association, it won't generate that airplane sitting on a banana. By the same token, a single image of a single airplane, or even just one angle, isn't enough stored data for the program to decide what the image will look like. The fact is, the plane has to look real in the end. So, to me, for this rendering system to work each time a person enters the keywords, storage of real images on servers is definitely involved in the process. I am not sure what "looking" means in binary without some level of storing. As humans, we can look, make sense of things, and sort of memorize them, but none of it is visually frozen in time. For example, my daughter might tell me that her friend is picking her up in a blue car in front of the house. I don't need to know the friend, her or his face, the make or model of the car, or whether there are clouds in the sky. Instantly, I have the scene in my mind, and that's enough info. I didn't have to store any details and break them down. These programs, on the contrary, do need specific values turned into data that is visually realistic (to us); otherwise, you may get a super blurry image, maybe just a blue image, but very likely something just grey.

If I want, for example, an Ili pika driving and crashing a Mercedes race car in the fifties, there is no way the images are not alterations of someone else's pictures. And this would happen without those photographers' knowledge. Granted, my example is fairly specific, but it's not that crazy for this type of AI image creation.

At openai.com, they state this: "We also used advanced techniques to prevent photorealistic generations of real individuals' faces, including those of public figures." I look at it two ways: either they are protecting themselves against parts of sourced photos being recognizable, or, as they say, it's to protect people. Either way, they are showing concerns that, to me, don't rule out the possibility that real images they don't own the rights to are being used. I mean, if the data is really random from "looking," what are the real chances that the AI would create a model who looks identical to an existing person? Winning the lottery would be a piece of cake by comparison.

I still think it’s kind of an interesting program.

From my understanding, Alex Herbert is correct. It's a combination of various imagery. Some things that interest me are 1) how do they acquire the hundreds of thousands of images for their database? 2) Who owns the copyright of the final images? I contacted the platform twice for comment, and they did not respond.

That's my point: there is nothing telling us where the images are coming from, and apparently very few people are questioning it, which is even more surprising.

Another very weird thing to me is that I haven't seen anyone comment on image resolution. I haven't read anything about image size.

Many people ARE questioning it, and there's info online about how it all functions https://www.assemblyai.com/blog/how-dall-e-2-actually-works/

You mean it didn't auto-reply... lol

This may help shed some light on what may be going on. We are currently facing a major shift in the law regarding the licensing and usage of images here in the UK, which could have a major impact on all creatives! https://www.aopawards.com/ai-data-mining-and-what-it-means-for-you/

Aidan Hughes, what an honor! One of my favorite product photographers. EXCELLENT reference here. This sentence from it was very striking: "With serious economic consequences for any creator, but most especially photographers with data-rich images, this proposal completely short-circuits the licensing process allowing AI developers and others free commercial access to content for which, under normal circumstances, they would have to license and pay for." Very, very interesting. Thank you for sharing this! I may have to write more about this.

You're too kind. Yes, it will be interesting to see where this all lands. After playing around with Midjourney for a bit I am excited for the possibilities it introduces to us creatives. However, it's a little scary to know that there is a danger of losing the rights to our own I.P. It definitely has the potential to become a double-edged sword. I guess time will tell.

Appreciate the article. I've been curious about Midjourney and DALL-E, among others. I think they can be great tools and innovations for people of various expertise. It does call into question the future of many creative industries (I'm curious for the sound/music-producing machine learning tools to start popping up).

However, I think there is a deeper issue here that is easy to miss: because "AI" has become a common term, it produces some misconceptions. A genuinely real AI is not necessarily going to care about your keywords. An AI will by nature possibly choose to express its own vision and renderings of its experience, desires, etc., and would arguably have license to its own creations. This is a far cry from the machine learning tools we're talking about, but the marketing trend is to call them AI anyway.

With that point out of the way, it is impressive what these algorithms are able to produce, though these tools still rely on human-generated indexes, coding, and input. Actual artists need not worry yet, as they are the ones who still get to produce their vision of the world. It does raise the question of who owns, or deserves credit for, these current 'AI'-generated creations, though.

Excellent feedback, JR. Intrinsic to the concept of AI is the independence of its interpretation. I had questions for the company about the copyright of the images once they are produced, but I did not receive a reply.

Let's all start bringing our cellphones to engagements and weddings. I mean, people tend to think phones are better than an actual camera, so they should have no problem letting us use our phones to photograph their big day.

It has its uses, especially for quick-and-dirty mockups. But the fundamental limitation is that spoken language is VERY different from visual language, so there's a whole lot of variability in what you'd get. It's like entering a bunch of keywords into Getty: you'll get a wide variety of valid images, and most are not what you're looking for. Or like describing a suspect to a police sketch artist (they should make a specialized variant of the software for that purpose).

It may change some things, mostly generic images needed for advertising, but even now much product advertising already uses computer-generated images, often without the product ever being photographed.

Excellent summary and I agree on all points

Something I've noticed about DALL-E and Midjourney is that they understand words in a very specific way. As with any computer system, we need to acknowledge that Garbage In = Garbage Out. We also need to understand what the software is doing with our input and analyze whether our input needs to change for us to get the result we expect.

While it may seem like a natural language input should work as we understand language, I've found that it doesn't always do what we expect. For example, when I asked for a moody image of a man, I got an image of a moody man.

So, to get the image that I want, I need to adjust my input. I think that these tools are very capable and that we need to explore and learn their capabilities as we have with other tools in our arsenal.

I've been using Photoshop for nearly 27 years, but I've been using these AI tools for just a couple of days. They're going to grow in amazing ways, and we need to keep up and learn how to use them to produce the results that we want.

This is an interesting perspective, Susheel Chandradhas. I would have loved to get a quote from them on the copyright ownership of the images produced. It's a whole new world they're dipping into.