As some of you may already know, we got off to a somewhat rocky start when we launched this new website. To recap: we ran into a few load issues right before our switchover deadline, so we postponed the launch for nearly 22 hours before trying again. We have been monitoring the servers very closely and working nonstop to squash bugs ever since. Now that the dust has settled, I'd like to talk to you guys about how we set up our servers on Amazon Web Services - the cloud.

This post may get a bit technical, so please feel free to skip to the next one if you don't have any experience with system engineering.

When Patrick and Lee told me they wanted to create a new website where thousands of people would sign up and use the Community daily, I knew our then-existing dedicated server setup wasn't going to cut it. Given that it would need to scale with usage, the only thing that would fit the bill was AWS.

With AWS EC2, you can essentially set up your webserver farm so that it scales out when there's a lot of traffic and scales back in when the traffic dies down (more on this later). AWS also offers other services like Virtual Private Cloud (VPC), Relational Database Service (RDS), Elastic Load Balancer (ELB), Route 53, ElastiCache, Simple Storage Service (S3), and Simple Email Service (SES) - everything we need to run a website. Here's a quick rundown of what each service does:

- VPC: when you sign up for an AWS account and start using the service, Amazon automatically creates a VPC and assigns it to you, with your own subnet. Our network is a /16, i.e. a subnet mask of 255.255.0.0 (yes, that's 65,536 possible internal IPs). Basically, this is like being able to access all the computers on your home or company network. Pretty neat.

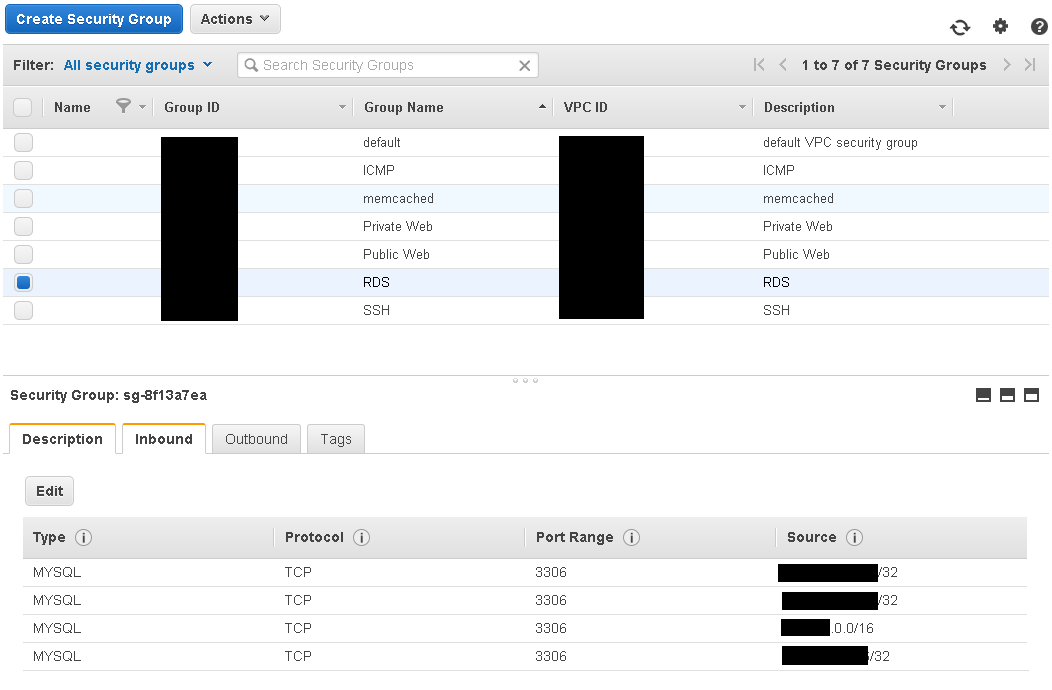

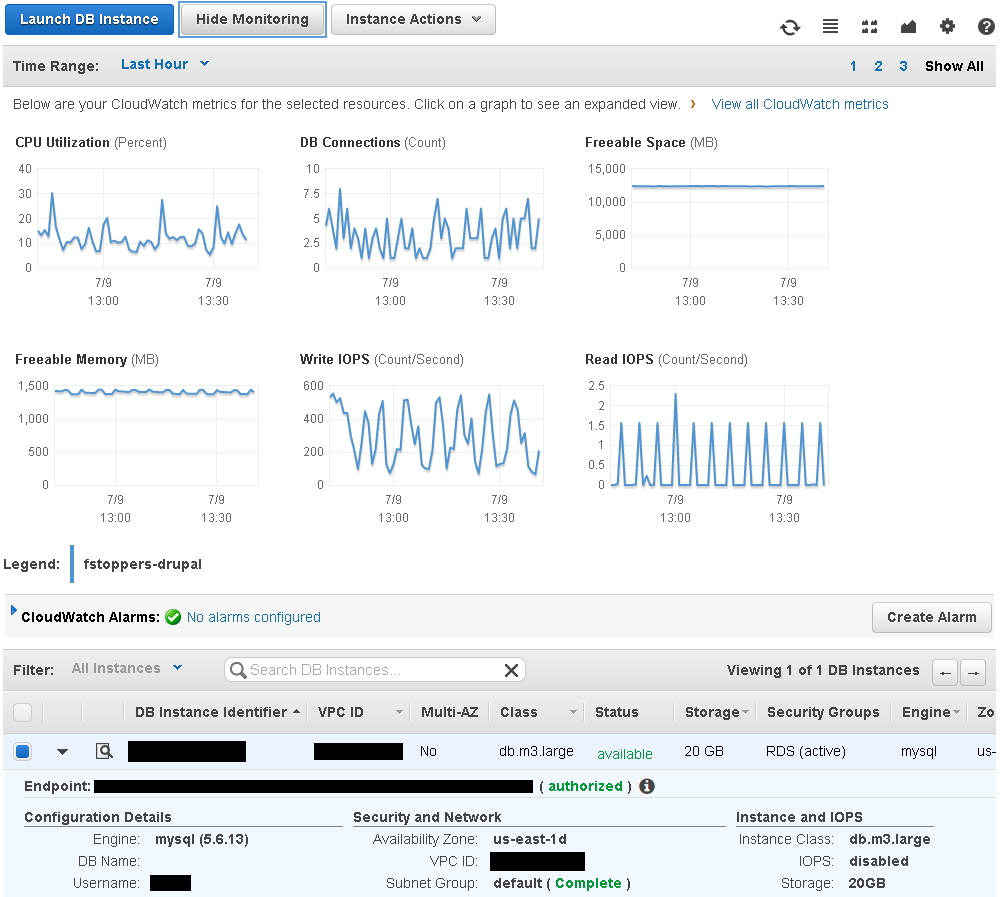

- RDS: we use MySQL for our website, so this is a must. With the help of AWS Security Groups, we can set this up so that only our EC2 instances (aka servers) can access our RDS instance. RDS is scalable. Right now, we're running on one m3.large instance.

- ELB: we're running a multi-webserver setup, so this is a must. Incoming internet traffic is routed to one of the instances, round-robin style. Each of you is assigned a "sticky" cookie that keeps you on the same instance for an hour after your last visit.

- Route 53: an AWS ELB can only be reached through its DNS name (it has no fixed IP address), so we have to use AWS Route 53 as our nameserver. Basically, the root DNS record (@) for our domain (fstoppers.com) would have to be a CNAME pointing at the ELB, which the DNS rules don't allow (unless of course we use Cloudflare). With Route 53, Amazon can point our root record to an alias, which behaves like a CNAME. The only downside is that the time-to-live for the record is very short, which isn't a huge deal.

- ElastiCache: we use Memcached for our website, with one cache for sessions and one for content (by the way, if you sometimes get logged out when you come back to our website, it may be because we had to restart our session cache while pushing out new code). You simply can't run a website this big without a cache. AWS ElastiCache is scalable. Right now, we're running on 1x m1.medium and 2x m1.small.

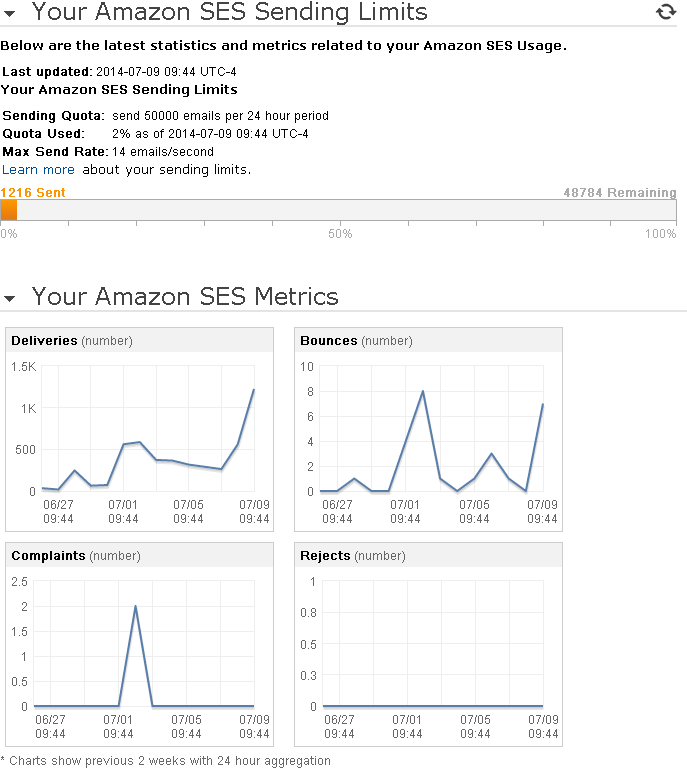

- SES: when you set up your webservers on AWS EC2, you have to keep in mind that these instances come and go, and so do their IP addresses. For any entity to send out emails as a permitted sender, you have to set up reverse DNS with your ISP so that when an email provider looks up, for example, jimbob@fstoppers.com, fstoppers.com resolves to a static IP. Since our IP addresses are dynamic on EC2, that can't happen. SES solves this. SES is free when you send fewer than 2,000 emails a day, as long as those emails come from EC2 instances. Just make sure you create your DKIM and SPF records!

- S3: all of the existing images from the old server have been migrated to S3, and all the new ones uploaded by our writers and users are saved on S3.

- EC2: basically, you can create and destroy servers with only a few clicks in the console. With our current setup, we work in our development environment. Once the code has been tested, we deploy it to production. The deploy process goes something like this: save our work, shut down the instance, create an Amazon Machine Image (AMI) from the development instance, create new production instances from the newly created AMI, add the new production instances to the ELB, and remove the existing instances. Right now we're running on two m3.xlarge instances.
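The last two steps of that deploy process (add the new instances to the ELB, then remove the existing ones) can be sketched roughly as below. This is a minimal in-memory simulation for illustration, not our deploy tooling: the `LoadBalancer` class, the `rolling_swap` function, and the instance IDs are all made up, and a real deploy would do each step through the AWS console or API. The point is the ordering: new instances join before old ones leave, so the pool never drops to zero.

```python
from dataclasses import dataclass, field


@dataclass
class LoadBalancer:
    """Hypothetical in-memory stand-in for an ELB's instance pool."""
    instances: list = field(default_factory=list)

    def register(self, instance_ids):
        # Add instances to the pool (stand-in for "add to ELB").
        self.instances.extend(instance_ids)

    def deregister(self, instance_ids):
        # Remove instances from the pool (stand-in for "remove from ELB").
        self.instances = [i for i in self.instances if i not in instance_ids]


def rolling_swap(lb, new_ids):
    """Register the new instances first, then deregister the old ones.

    Returns the smallest pool size observed during the swap.
    """
    old_ids = list(lb.instances)
    sizes = [len(lb.instances)]
    lb.register(new_ids)
    sizes.append(len(lb.instances))
    lb.deregister(old_ids)
    sizes.append(len(lb.instances))
    return min(sizes)


# Made-up instance IDs, two old and two new.
lb = LoadBalancer(["i-old-1", "i-old-2"])
smallest_pool = rolling_swap(lb, ["i-new-1", "i-new-2"])
print(lb.instances, smallest_pool)
```

Doing it in the opposite order (remove, then add) would leave a window with no instances behind the load balancer.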

As you can see, things may get a bit tricky when these instances come and go. First, we have to assume that nothing will ever be saved on those instances. We may need direct access to get on those instances to inspect the logs at times, but images and other media files will have to stay on S3.

Also, since these instances only talk to the ELB, all traffic looks like it comes from one source. We had to hack the web service to display the correct source.
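One common way to recover the visitor's address behind an ELB is the `X-Forwarded-For` header, to which the load balancer appends the address of whoever connected to it. Here is a minimal sketch in Python, for illustration only and not necessarily the change we made. The `client_ip` function name is made up, the headers are assumed to arrive as a plain dict with exactly this key spelling, and there is assumed to be exactly one load balancer in front of the instance.

```python
def client_ip(headers, remote_addr):
    """Return the visitor's IP as seen by the load balancer.

    Assumes exactly one trusted load balancer sits in front of the
    instance, so the right-most X-Forwarded-For entry is the one it
    appended. Entries further left were supplied by the client and
    can be forged.
    """
    forwarded = headers.get("X-Forwarded-For", "")
    parts = [p.strip() for p in forwarded.split(",") if p.strip()]
    # Fall back to the socket address when the header is absent,
    # e.g. when hitting the instance directly for debugging.
    return parts[-1] if parts else remote_addr


# Example addresses are from documentation/private ranges.
print(client_ip({"X-Forwarded-For": "203.0.113.7"}, "10.0.1.25"))
print(client_ip({"X-Forwarded-For": "198.51.100.9, 203.0.113.7"}, "10.0.1.25"))
print(client_ip({}, "10.0.1.25"))
```

Taking the right-most entry rather than the first is a deliberate choice here: with more than one proxy in the chain, you would need to skip one entry per trusted hop instead.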

AWS ELB also supports SSL, so all of our encrypted HTTPS traffic will be decrypted at the ELB, and then routed to the instances. We plan on rolling out HTTPS-everywhere in the near future. Right now, we're still focusing on fixing bugs that have been reported by our users in the Facebook group.
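Because HTTPS is decrypted at the ELB, the instances themselves only ever see plain HTTP, so an HTTPS-everywhere rule can't simply check the local connection. The ELB passes the original scheme along in the `X-Forwarded-Proto` header. Below is a rough sketch of how a redirect decision could use it. The function names are hypothetical, the headers are assumed to be a plain dict, and treating a missing header as plain HTTP is an assumption of this sketch.

```python
def needs_https_redirect(headers):
    """True when the visitor reached the load balancer over plain HTTP."""
    # Assumption: a missing header is treated as plain HTTP.
    proto = headers.get("X-Forwarded-Proto", "http").lower()
    return proto != "https"


def https_url(host, path):
    """Build the HTTPS URL to redirect to."""
    return "https://" + host + path


request_headers = {"X-Forwarded-Proto": "http"}
if needs_https_redirect(request_headers):
    print("redirect to", https_url("fstoppers.com", "/community"))
```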

Please understand that there are a lot of moving parts to our setup, and the codebase is huge, so things may or may not work properly within the first few weeks/months. We're constantly working on fixing bugs and developing new features. If you have any questions or suggestions, drop us a comment below or post it in the Facebook group and we'll make sure to get to it as soon as we can.

Fuck Yeah!

Can't touch this.

Wut?

Yeah boiiiiiiiiiiiiiiiiiiiiii

Great write. Now it should give people a little insight on what it takes to run a site like this.

Tam da wizard

Magic. Nice job on everything!

i not understanding

Great insight Tam, quite the project and a job well done.

Curious though, with all the abbreviations you had I didn't see WTF? anywhere.

I tried, but I really couldn't incorporate it into a sentence :)

Really? I think you're just being modest. I can do a search in the beta group for you. HA HA!

Oh man... I know I could go buckwild at times :)

Wow....very impressive. Who is writing all the code? What languages? How long did it take to code this entire new format with all the features? Again..very impressive that this is so seamless.

Just to put it in perspective and I have no idea what your budget is, but I see so many more egregious defects going out daily from VERY WELL PAID developers in much more "serious" environments compared to any problem I have ever encountered on FStoppers sites.

Kudos!

Thank you so much! Our in-house code monkey is Joseph Pelosi. The app is written in PHP. I think we started doing this some time late last summer, so it's been about a year.

Well congrats to both of you then. I work on trading systems for a major trade-floor here in NYC and rarely are our implementations so seamless....and this is an environment where seconds/minutes of downtime can cost us thousands of dollars as the market may move against us.

Why didn't you just say that you run it with The Matrix? I mean....that's what the cloud is right? Right guys?

Did not expect to find so much awesome tech info in Fstoppers this morning. What a pleasant surprise! Very interesting

I'm glad you can understand this :)

The only words I could understand in this article were prepositions, articles, and Amazon... (But I know other people can get it and love this stuff. Looks thorough, Tam. Nice work! :-))

Words!

You lost me at "hello."

Since I'm a visual person, my only take away is that at some point, Fstoppers' traffic goes through a flower pot.

Very nice. One question...can you get at your data (or backups at least of it) if everything amazon wise goes down (i.e. are your eggs all in one basket)? Highly unlikely scenario (Amazon going down) but just a question I immediately thought of looking at your diagram. Obviously you don't want to disclose too much to the intrawebz and all but just figured I would ask.

There's no need for any backups anymore. Every image is saved on our AWS S3 bucket, the code is checked in a repository, and the entire instance image is created each time we deploy a new version. Even if we lost the image, we can always build a new one and grab the code from the repository. RDS is automatically backed up daily, with 14 days retention.

Amazon cloud really makes it easy for people like me :)

That's really quite amazing Tam. Between you and Joseph and whoever else was involved, it's a massive accomplishment. Can't wait to see what's coming in the next 12 months.

Thank you sir!

Even your databases?

^Guess that was what I was originally asking. Thanks for explaining the other though.

Yessuh. You can set what day and time for AWS to create snapshots of your DB, and it can do it without downtime at all, all via the console, so there's really no manual work or coding involved.

It would be great if you could put CloudFront out in front so that those located in far-flung locations can retrieve the content faster.

That could be arranged. Just let us know where we can send our monthly bills to.

That's what I love... and hate.. about AWS. You can do so much, and the pricing looks small, but it all adds up.

One way to potentially speed it up for those outside the US without increasing cost (in fact it would reduce transfer cost out of the AWS region/availability zone) would be to compress the content (gzip). As long as you aren't trying to support really old browsers without gzip support.

It's a great site so far, and I've been enjoying stumbling on new features as I explore further. Well done guys

Yes, AWS bills can really add up, unfortunately. That's the cost of cloud scalability.

We initially served existing photos (from the old server) and CSS/JS files from the instances, but we realized it wasn't making much of a difference, since our S3 bucket and the instances are from the same region, so cost/speed would be the same. We decided to use S3 for everything to make the instances completely stateless and volatile.

We did look into CloudFront, but since we have SSL on the site and we want to keep that lock green all the way, supporting HTTPS would add $600 a month just to keep that green lock, not including the cost of serving the media to and from all over the globe. In fact, years ago we did turn it on while we were using WordPress, and it nearly broke the bank.

I'm glad you enjoy the site so far. We have a lot of things on our todo list, and we're trying to knock them out one by one. Stay tuned!

Any reason why you guys went with AWS vs Azure?

I'm a diehard Windows fan, but I don't trust Microsoft. At least to run our business on.

Radical..