Instagram's censoring policies make no sense at all. But the least they could do is not call women prostitutes based on what they are wearing.

Instagram's Policies Make No Sense

As I mentioned in a previous article, Instagram's Community Guidelines on nudity are very confusing. Look at their rule about butts in the list of things that you should not post:

Visible anus and/or fully nude close-ups of buttocks unless photoshopped on a public figure.

For some reason, the world is only safe if you post zoomed-in, fully nude, photoshopped buttocks shots of public figures, or possibly visible anus shots photoshopped onto public figures, depending on how you read that sentence. Having a rule about how close you can zoom in on fully nude buttocks is one thing, but what about weirder things like bestiality? You'd think that would be a hard pass, but you'd be wrong. Right around Thanksgiving 2021, while the rest of us were preoccupied with turkey, the committee of nudity guidelines at Instagram changed their policy to add a new category of things they allow but will age-restrict:

Imagery depicting bestiality in real-world art provided it is shared neutrally or in condemnation and the people or animals depicted are not real.

Luckily, they keep a change log, so you can see what they add, what they take away, and when. That was added in the November 24, 2021 update to the nudity policy.

Instagram Uses AI to Scan Posts for Policy Violations

Instagram describes its policy on its blog here:

Artificial intelligence (AI) technology is central to our content review process. AI can detect and remove content that goes against our Community Guidelines before anyone reports it. Other times, our technology sends content to human review teams to take a closer look and make a decision on it. These thousands of reviewers around the world focus on content that is most harmful to Instagram users.

and here:

How technology detects violations

UPDATED JAN 19, 2022

People on Facebook and Instagram post billions of pieces of content every day. Even with thousands of reviewers around the world, it’s impossible for them to review it all by themselves. That’s where Meta artificial intelligence comes in.

It's like this, except they only scan images of women.

The system is then verified and trained by humans:

When we first build new technology for content enforcement, we train it to look for certain signals. For example, some technology looks for nudity in photos, while other technology learns to understand text. At first, a new type of technology might have low confidence about whether a piece of content violates our policies.

Review teams can then make the final call, and our technology can learn from each human decision. Over time—after learning from thousands of human decisions—the technology becomes more accurate.
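To make that description concrete, here is a rough sketch of how confidence-based routing could work. This is only my illustration of the idea in the quotes above: Meta does not publish its thresholds or code, so the names `REMOVE_THRESHOLD`, `REVIEW_THRESHOLD`, and `route` are all hypothetical.

```python
# Hypothetical thresholds: Meta does not publish the real values.
REMOVE_THRESHOLD = 0.95  # assumed: at or above this, the post is removed automatically
REVIEW_THRESHOLD = 0.60  # assumed: at or above this, the post goes to a human reviewer


def route(confidence: float) -> str:
    """Decide what happens to a post given a violation score between 0.0 and 1.0."""
    if confidence >= REMOVE_THRESHOLD:
        return "remove"
    if confidence >= REVIEW_THRESHOLD:
        return "human_review"
    return "keep"


# A confident score is removed outright, a middling one is escalated,
# and a low one is left alone.
for score in (0.99, 0.70, 0.10):
    print(score, route(score))
```

If something like this is what is happening, then the removals I describe later would mean the scoring model is confidently wrong about photos of women, and the reviewers who are supposed to correct it are agreeing with it.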

Instagram's Policy on Sexual Solicitation Seems Reasonable on Its Face

Here is the policy from the community guidelines page:

Do not post: Content that offers or asks for adult commercial services, such as requesting, offering or asking for rates for escort service and paid sexual fetish or domination services.

Content that is implicitly or indirectly offering or asking for sexual solicitation and meets both of the following criteria. If both criteria are not met, it is not deemed to be violating. For example, if content is a hand-drawn image depicting sexual activity but does not ask or offer sexual solicitation, it is not violating:

Criteria 1: Offer or ask: Content that implicitly or indirectly (typically through providing a method of contact) offers or asks for sexual solicitation.

Criteria 2: Suggestive Elements: Content that makes the aforementioned offer or ask using one or more of the following sexually suggestive elements...

In sum, don't post things that directly or indirectly seek to arrange for prostitution. Indirect sexual solicitation requires a post to both 1) ask for sex in exchange for money, and 2) contain suggestive elements. If it does not have these two elements, it's not in violation.
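Written out as logic, the indirect solicitation rule is a simple AND. The sketch below is my own restatement of the policy text, not anything Instagram has published; the function and parameter names are made up for illustration.

```python
def is_indirect_solicitation(offers_or_asks: bool, has_suggestive_element: bool) -> bool:
    """Restates the quoted rule: a post violates only if BOTH criteria are met."""
    return offers_or_asks and has_suggestive_element


# The policy's own example: a hand-drawn image depicting sexual activity
# that does not ask for or offer anything is not a violation.
print(is_indirect_solicitation(offers_or_asks=False, has_suggestive_element=True))

# A post that makes an offer and uses a suggestive element meets both criteria.
print(is_indirect_solicitation(offers_or_asks=True, has_suggestive_element=True))
```

Under this reading, a suggestive photo with no offer or ask anywhere in the post should never be flagged as solicitation, no matter what the subject is wearing.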

Instagram's AI Scans Posts of Women and Determines if They Are Asking for Sex Based on What They Are Wearing

Scanning a post for nudity is one thing. It's a simple training of the AI to see if the zoomed-in fully nude buttocks is photoshopped on a public figure or if the nipples are on a man or woman (female nipples are not allowed, but male nipples are). But that's not what Instagram is doing. It's reading pictures and the captions and determining if the woman in the photo looks like she is asking for sex based on how she is standing or what she is wearing. It will do this regardless of what the caption says. I know because it's happened to me over 20 times. It's happened to numerous friends and colleagues as well.

Here's an image I posted in my stories that was removed because the women were apparently asking for sex in exchange for money. A lingerie company I work for posted my pictures on their feed every day for about two weeks. I posted a screenshot of the feed, and Instagram would have none of it:

Here's one image that was deleted of a woman standing by the window. I can't show you the image because it was deleted, but I can assure you she was not asking for sex. Here's the caption of that photo that is still available for me to see in my account settings, where it lists my removed content:

The important thing about this particular image is that I requested a review and a human who works for Instagram looked at it and still determined that the subject in the picture was in some way looking to engage in an exchange of sex for money. I've had two cases where the image was removed and reviewed by a human at Instagram and the decision was made that the subject was in fact trying to engage in prostitution.

My story that was removed did not have any captions other than my brag about "owning that feed." My other images did not have captions that came close to violating any guidelines. If the caption had nothing to do with it, then the determination of whether she was asking for sex was based solely on what the model was wearing or how she was standing. Keep in mind, these are not nudity violations. These are specifically cases of Instagram believing that these women are trying to get people to have sex with them for money.

Your Options to Pursue the Matter Further Are Limited

It is virtually impossible to get ahold of an actual human at Instagram. You can always use the Report a Bug feature in the help menu, though.

On three occasions, I received a notification from Instagram that I could take my case up with the Oversight Board. The Oversight Board is a committee of human rights workers, politicians, educators, and corporate executives that reviews appeals, decides which cases are serious enough to take up as a committee, and then determines whether there was in fact a violation. You can only appeal decisions that have a reference number. Not all decisions get a reference number. They do not state how they determine which cases will get a reference number for review by the Oversight Board.

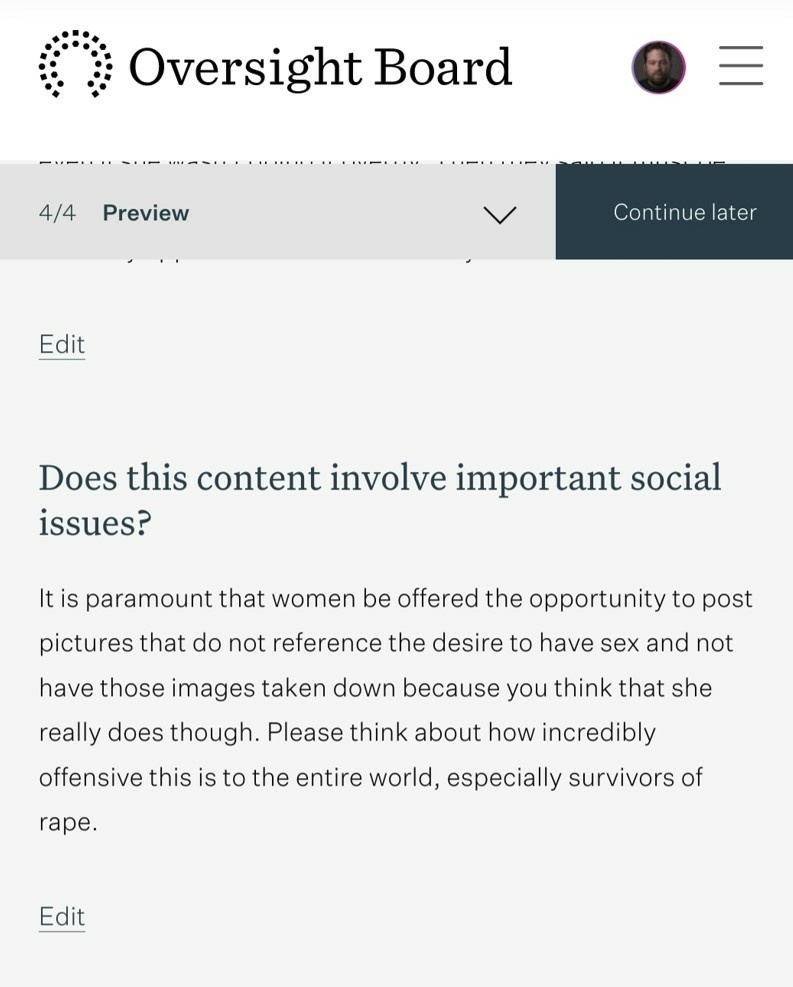

Once you go into the Oversight Board and enter your reference number, you have the opportunity to fill out information about your appeal and the issues you believe relate to your case.

They only select a handful of cases each year. Mine were not selected.

There are actual sexually explicit posts that show up on my Suggested feed, and they're still up. Yet Instagram goes after art or general nudity.

Examples of the sexually explicit posts:

https://www.instagram.com/p/CnQAMkLhg_o/

https://www.instagram.com/p/CmMu8tKP5Kd/

https://www.instagram.com/p/CmOKRvAI5aL/

Also, there are porn posts. Looks like folks are able to get away with it with reels. They'd use a porn image as a thumbnail. IG's AI is completely overlooking them.

Haha, I won't be posting links to those.

I have been getting ads on Facebook that show actual sex acts and I have no idea why I can't get ads approved and they do.

The whole approach is absurd. Just because a photo shows "nudity" doesn't mean it's strictly sexual or exploitative in nature. Scrolling through IG, I see all kinds of photos of fully clothed or mostly clothed women that meet IG's good-to-go guidelines but are in fact some of the most over-the-top, sexually vulgar photos you'll see.

My next article addresses some of these issues. Thanks for reading!

Hopefully, Meta will relax their bare breast policies and fewer of these post removals will occur.

https://petapixel.com/2023/01/18/facebook-and-instagram-told-to-lift-ban...

My next article covers this decision in depth: what it will mean and what will stay the same.

If you find the photo offensive, don't look at it. Before you know it, our morals police will be in the art museums covering some of the greatest art in the world because they find it immoral...

This AI nonsense seems to be everywhere these days. Some companies deploy AI crap (chatbots) to replace real customer care. Horrible trend.

Well, I think these algorithms are being programmed by people who obviously don't know anything about performing arts or photography. And whose secret wishes probably flow into the programming.

But there is another important aspect to consider: most of the affected photos are posted by male photographers. If, in Meta's opinion, these photos are intended for sex contacts, Meta is subtly accusing the photo artists of pimping! Hello Meta, one could take legal action against this.