Recently, we've seen a lot of AI used to create images, but so far it hasn't been applied nearly as widely to editing existing ones.

Yes, we can type something like "create a lake with palm trees and an overcast sky," but now we're beginning to see early efforts at editing camera-produced pictures with similar techniques. Say (or type) something like "put some lightning and dark clouds in the sky," and an AI-enhanced image editor will add them to your image. Apple is working on this technology with AI researchers from the University of California, Santa Barbara. In fact, the group has released an open-source, AI-powered editing beta that anyone can try online.

The model is called 'MGIE', which stands for MLLM-Guided Image Editing (MLLM being short for multimodal large language model). It lets you describe the changes you want to make to an image in natural language.

The technology is detailed in a paper released this month, which suggests that AI-assisted editing could make the editing process more efficient.

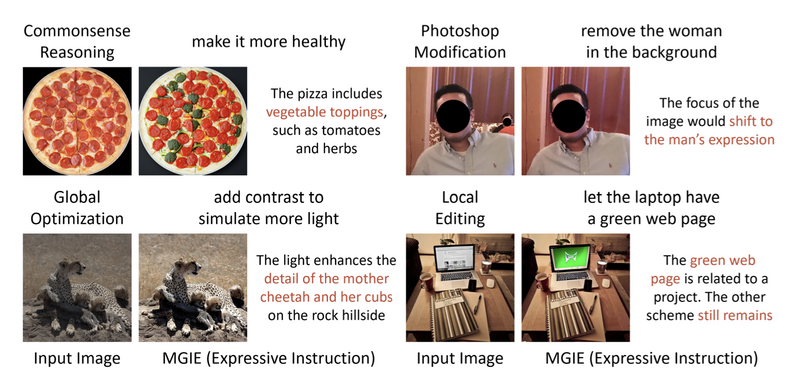

The research identifies four types of editing that can be handled with AI. Quoting the paper:

"Expressive instruction-based editing: MGIE can produce concise and clear instructions that guide the editing process effectively. This not only improves the quality of the edits but also enhances the overall user experience."

"Photoshop-style modification: MGIE can perform common Photoshop-style edits, such as cropping, resizing, rotating, flipping, and adding filters. The model can also apply more advanced edits, such as changing the background, adding or removing objects, and blending images."

"Global photo optimization: MGIE can optimize the overall quality of a photo, such as brightness, contrast, sharpness, and color balance. The model can also apply artistic effects like sketching, painting, and cartooning."

"Local editing: MGIE can edit specific regions or objects in an image, such as faces, eyes, hair, clothes, and accessories. The model can also modify the attributes of these regions or objects, such as shape, size, color, texture, and style."

Below is an illustration from the paper.

For example, you might type into an editor, "make this image more saturated," and the image would change. Typed instructions could also remove objects from an image.

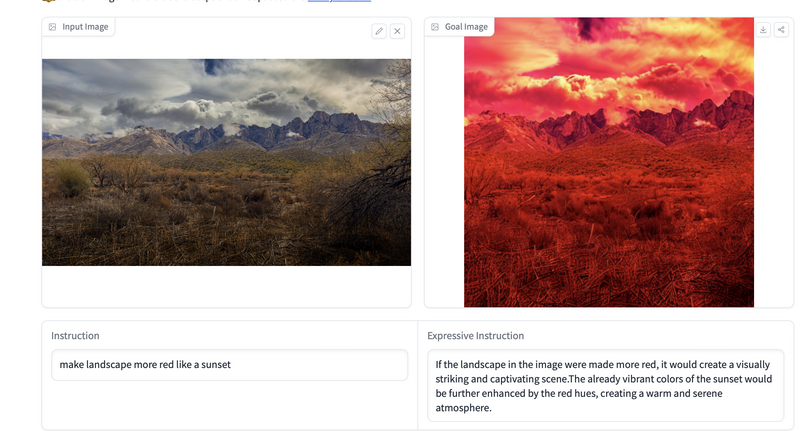

There is a rough beta online where you can drag in your own images and type editing instructions. I tried it with a landscape image, asking it to make the scene redder, like a sunset. I didn't find the result very satisfying, and my image was cropped as well.

But it's a beta, and some other examples I tried worked better. If you upload an image, be prepared to wait, since a lot of people are trying it. It took about 30 minutes to get my image edited, though the webpage shows you where you are in line.

This could turn out to be very useful software if it proves better and faster than manual adjustments. I suppose one could say "increase vibrance by 30%," but I'm not sure that would be faster than moving a slider on the screen. On the other hand, if it accepts speech directly, or if you type quickly, I could see it speeding up editing, provided it's really sophisticated. It could also be valuable for editors with disabilities.

Apple is going all-in on AI: last week's release of the Apple Vision Pro is one example, and Apple says Siri is going to get much smarter in upcoming software releases. Apple has hinted at breakthroughs in generative AI, and MGIE could be the first look at the fruits of those efforts.
