The images were taken at the same location on the same day, moments apart, and I now have an image of the two together. It will be printed and hung on the wall as a lasting memory of that day's hike and of my girls together. Had I done this the conventional way using masks, I could still have done it, but it would have taken me a bit longer. Thanks to the Photoshop Beta and Generative Fill AI, I was able to do it in a fraction of the time.

Generative Fill AI

You are probably well aware by now of the new feature in Adobe's Photoshop Beta, as there have been plenty of videos and articles about it, just like this one. One of the first things many of us do when we first try it is extend the canvas and generate a fill to see how good it is.

If you've yet to try it out, the actual process is very straightforward and only takes a few steps to complete.

- Extend your canvas using the crop tool.

- Using the lasso tool, draw around the area you want to fill.

- Click in the prompt box and leave it blank.

- Click Generate.

- In the Properties panel, three options will appear for you to cycle through so you can pick your choice. If none of them are to your liking, click Generate again and another three will be created.

You can also save your .PSD at this stage, and the generated fills will be saved along with it. Remember, though, that doing this will also increase the file size.

Embellishments

Adding elements to embellish an image is probably another thing you will try, and again, it does this really well. The steps are the same, except that you don't extend the canvas. The image below has five generated fills applied. You can probably guess the barn and the car, but can you tell what else has been filled?

Intuitive

The image above is a simple extended canvas from a composite I created (left). Using only a blank generate command, Generative Fill added the outer edges and even added flames on the right. I mention this because in my edit, I created a backlight glow from the fire onto the knight's armor and chain mail, and it is stronger on the right side of the chain mail than on the left, especially on the elbow and head. Given the flames in the background, Generative Fill could have added flames on both sides, but it added them only on the right. I don't know how the AI algorithm decided that this was the right choice, but I was impressed.

Creative Ideas

Using the tool simply comes down to your creative expression and ideas. In the example below, I wanted to see how much it could speed up my workflow and then embellish the image. First, I removed the necklace by lassoing it and using a blank fill. Next, I selected an area again and added clothes, this time with a typed prompt. Out of the first three results it generated, I chose the white top. Then came the embellishment: wind-blown hair. Yes, it's not perfect, and I haven't done any post-processing after the prompts, but it was incredibly fast. If you have never added hair to an image before, believe me, getting the tones and look right can be quite time-consuming.

New Landscapes

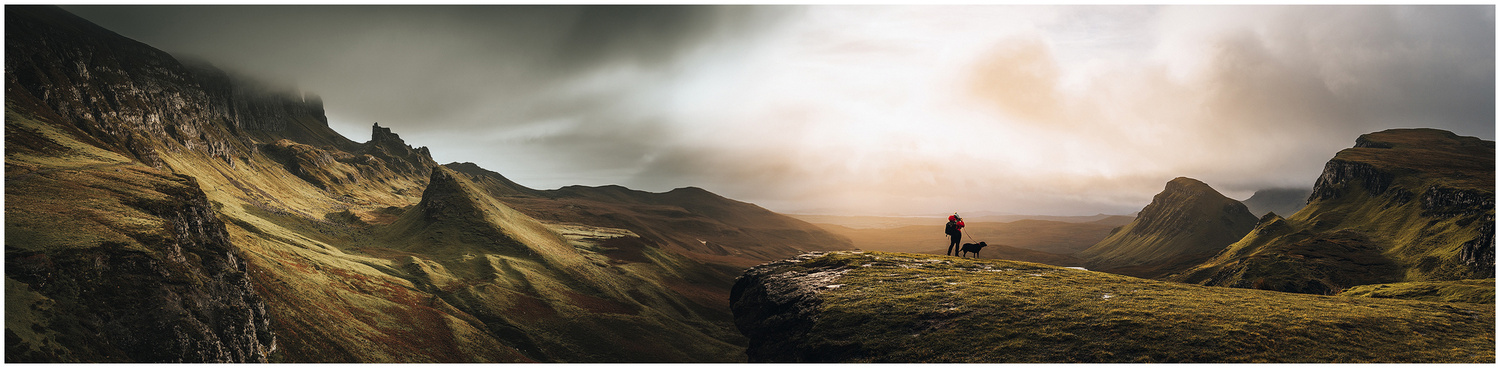

Another fun thing to try is creating new landscapes from existing images. This idea is not mine. It belongs to Fstoppers editor Robert K. Baggs, but it is so much fun to try. I found myself lost in another world for over an hour, searching through images that might work together and then generating a fill between them. Some of the results were quite a sight to behold, but others worked well. As you would expect, images shot at similar f-stops worked better together. Many of the more successful pairs were also photographed in or around the same location with similar lighting, and a matching camera angle and elevation helped produce a more pleasing image. Creating backgrounds for my composites from my own photographs will be much faster. I'm not one for creating images purely from prompts, and I prefer to work from photographs, but if the tool can speed up my workflow using my own images, bring it on.

Positive Ideas

Is it a good or a bad thing? Well, that is the question, and it depends on how you look at it. AI is here to stay, so shouldn't we learn how to use it creatively and positively? Yes, there will be those who create images entirely with it and claim that they took the photo. To be honest, that's for them to live with, as they are only fooling themselves.

- Can it create images that never existed? Yes.

- Can it lessen the skill level required to use Photoshop? Yes.

- Could it turn anyone into a creative? Yes and no.

- Will it speed up your workflow? A resounding yes.

- Is it a one-stop shop for editing and finishing images? No.

- Is it fun to use? Yes.

- Is it going to change a lot for post-processing? Another resounding yes.

Many more questions could be asked here, with plenty of debate for and against, and the answers above are only my opinion. I didn't want to go into that debate in this article, as there are plenty of articles about it on the internet. I also wrote one of them when Midjourney first reared its head. What makes this tool stand out are its creative and positive applications, and those are limited only by imagination.

The new Generative Fill AI is only one of the new features to speed up your workflow in Photoshop, and it certainly succeeds at doing so. There are other new features that I will cover in another article that also speed up the post-processing for both photographers and designers. Is Adobe thinking about the end user? Yes. Will these new features change everything? What are your thoughts?

If you would like to see the examples above in action, check out the video above.

It's here to stay. Ugh. It's the easier, softer way for me. I need to avoid it, or I will stop maturing in my skills, composition, etc. I hope the industry insists on tagging AI, so we all know.

You definitely still need a skill base to use it, as it's not a one-stop shop; it only speeds up the process. Plus, if users don't have the skill base, how are they going to sort it out when something goes wrong? I'm already planning ways to introduce it in next year's curriculum and to show how to correct it using traditional methods and tools.

It's here to stay. Yes, I think so, too. The question is how to use the AI. In my opinion, Adobe has found an ingenious solution here: it lets you apply it to a photograph you have taken. This is an incredible gain in time and effectiveness, and the photographer keeps the upper hand, as long as he applies it gently and correctly, for changes he would make anyway as part of his workflow.

Even in beta form, it's already a game changer for my corporate portrait work. I haven't seen anyone talking about how EASY it makes dealing with reflections. I used to spend so much time cleaning up glare and reflections from clients' glasses, and now it's a one-click fix. That big AC unit in the background of the room they insisted on shooting in? Gone.

The only limitation right now is pixel quality, but that can be worked around. Game-changing tool, and I'm never going back.

I had included a section on reflections in the video but cut it so that I could do a separate video on it. It worked brilliantly on everything I tried it on, and I see it has for you as well.