Not every brilliant camera technology dies because it's flawed. Sometimes innovations vanish because the market couldn't support their development, manufacturers chose cheaper alternatives, or the industry simply moved on before the technology reached its full potential. Here are three examples of camera technologies that deserved better fates than they received.

Foveon X3 Sensors: The Color Accuracy We're Losing

Walk into any camera store today and you'll find sensors using the Bayer filter array, a technology that has dominated digital photography since its early days. Nearly every modern camera, from your smartphone to flagship DSLRs, relies on this approach, where each pixel captures only one color and sophisticated algorithms guess at the other two. We've become so accustomed to this compromise that we forget it's a compromise at all.
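
To make "guessing at the other two colors" concrete, here is a minimal sketch of the simplest textbook form of demosaicing, bilinear interpolation. It assumes an RGGB Bayer layout and a raw mosaic stored as a list of rows at least 2×2 in size. Real cameras use far more sophisticated, proprietary algorithms; the function names below are illustrative only.

```python
# Minimal bilinear demosaicing sketch for an RGGB Bayer mosaic (illustrative only).
# Assumed layout:  even rows: R G R G ...   odd rows: G B G B ...
# Assumes the mosaic is at least 2x2 so every 3x3 neighbourhood holds all colors.

def bayer_color(row, col):
    """Return which color the photosite at (row, col) records in an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def estimate(mosaic, row, col, color):
    """Estimate `color` at (row, col).

    A measured value is returned unchanged; any other color is a guess:
    the mean of the neighbouring photosites (3x3 window) that recorded it.
    """
    if bayer_color(row, col) == color:
        return float(mosaic[row][col])
    height, width = len(mosaic), len(mosaic[0])
    samples = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            r, c = row + dr, col + dc
            if 0 <= r < height and 0 <= c < width and bayer_color(r, c) == color:
                samples.append(mosaic[r][c])
    return sum(samples) / len(samples)


def demosaic(mosaic):
    """Return a full-resolution image of (R, G, B) tuples from a Bayer mosaic."""
    return [
        [tuple(estimate(mosaic, r, c, ch) for ch in "RGB") for c in range(len(mosaic[0]))]
        for r in range(len(mosaic))
    ]


if __name__ == "__main__":
    # A flat-colored scene sampled through the Bayer pattern.
    scene = (200, 100, 50)
    mosaic = [[scene["RGB".index(bayer_color(r, c))] for c in range(4)] for r in range(4)]
    print(demosaic(mosaic)[1][1])  # (200.0, 100.0, 50.0)
```

On a flat color the guesses happen to be exact, but across a sharp edge the averages blend values from both sides, which is the kind of softening the next sections attribute to demosaicing.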

Sigma's Foveon X3 sensor took a radically different approach. Instead of arranging red, green, and blue filters in a mosaic pattern across a single layer, the Foveon stacked three separate layers on top of each other, exploiting silicon's natural property of absorbing different wavelengths of light at different depths. The top layer captured blue light, the middle layer green, and the bottom layer red. This meant every single pixel location captured complete color information without any interpolation, demosaicing, or algorithmic guesswork.
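
The depth-dependent absorption that the Foveon design relies on can be illustrated with the Beer-Lambert law. The sketch below uses rough, approximate absorption coefficients for silicon and hypothetical layer depths; neither comes from Sigma, and the real sensor's geometry and spectral responses differ.

```python
import math

# Approximate absorption coefficients of crystalline silicon, in 1/micrometre.
# Rough illustrative values only, not Sigma's design data.
ABSORPTION_PER_UM = {
    "blue (450 nm)": 2.5,
    "green (550 nm)": 0.7,
    "red (650 nm)": 0.28,
}

# Hypothetical layer boundaries, in micrometres below the sensor surface.
LAYERS = {"top": (0.0, 0.4), "middle": (0.4, 2.0), "bottom": (2.0, 6.0)}


def fraction_absorbed(alpha, z_start, z_end):
    """Beer-Lambert law: share of incoming photons absorbed between two depths."""
    return math.exp(-alpha * z_start) - math.exp(-alpha * z_end)


def layer_profile(alpha):
    """Return {layer name: fraction absorbed} for light with coefficient alpha."""
    return {name: fraction_absorbed(alpha, z0, z1) for name, (z0, z1) in LAYERS.items()}


if __name__ == "__main__":
    for color, alpha in ABSORPTION_PER_UM.items():
        profile = layer_profile(alpha)
        shares = ", ".join(f"{name} {share:.2f}" for name, share in profile.items())
        print(f"{color}: {shares}")
```

With these assumed numbers, blue is absorbed mostly in the top layer, green mostly in the middle, and red mostly in the bottom. The separation is far from clean, though: the middle layer, for instance, absorbs roughly a third of the blue and of the red light, and some red light passes below the deepest layer entirely.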

The image quality advantages were immediately obvious to anyone who used one. The Sigma DP and SD series cameras, particularly those with Merrill-generation sensors, produced files with a color purity that Bayer sensors simply couldn't match. Fine details remained sharp without the softening effects of demosaicing algorithms. Color transitions were smooth and natural. Fabrics showed individual threads with remarkable clarity. The sensors excelled particularly with foliage, rendering leaves and grass with a three-dimensional quality that seemed almost tactile. This perceptual sharpness advantage came despite higher noise levels and ISO limitations that restricted their practical use.

But Sigma faced insurmountable engineering challenges. The fundamental physics of the three-layer design made scaling difficult. Light has to pass through the top two layers to reach the bottom red-sensitive layer, which inherently reduces sensitivity. The Merrill generation, whose final camera arrived in 2013, was the last to use true three-layer Foveon sensors with full resolution in every layer. When Sigma attempted to evolve the technology with the Quattro design in 2014, it abandoned true per-pixel color capture: the top layer kept full resolution, but the middle and bottom layers used larger, grouped pixels, so the green and red channels required interpolation. By 2019, when Sony released its 61-megapixel sensor in the a7R IV, Sigma's technology had effectively stagnated. More critically, the design's light efficiency problems meant high-ISO performance lagged years behind conventional sensors. Professional photographers who needed to shoot in low light or deliver large files for commercial work couldn't justify the limitations, no matter how beautiful the color rendering.

The technology hasn't officially died. Sigma has announced a full-frame Foveon sensor, but it has faced years of delays and its future remains uncertain. Still, the writing seems to be on the wall. Sigma, a lens manufacturer first and camera maker second, lacks the resources to solve the engineering problems at the pace the market demands. Had Canon or Sony invested in developing the technology, we might have seen versions that addressed the resolution and ISO limitations. Instead, computational photography and ever-more-sophisticated demosaicing algorithms became the industry's answer to color accuracy. The gap seems to have narrowed enough that Foveon's color advantage no longer justifies its other drawbacks.
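
The Quattro compromise can be sketched as a layout in which the top layer has four times as many photosites as each lower layer. This is only an illustration under stated assumptions: each lower-layer sample is assumed to cover a 2×2 block of top-layer positions, and simple nearest-neighbour upsampling stands in for whatever interpolation Sigma actually used. The function names are hypothetical.

```python
# Sketch of a Quattro-style 1:1:4 layer layout (illustrative assumptions only).
# Top layer (blue): full resolution. Middle (green) and bottom (red): one sample
# per 2x2 block of top-layer positions. Nearest-neighbour upsampling is a
# stand-in, not Sigma's actual processing.

def upsample_2x(layer):
    """Nearest-neighbour upsample: repeat every sample across a 2x2 block."""
    out = []
    for row in layer:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def assemble(top, middle, bottom):
    """Combine a full-resolution top layer with two quarter-resolution layers.

    Returns rows of (top, middle, bottom) tuples at top-layer resolution.
    """
    middle_full = upsample_2x(middle)
    bottom_full = upsample_2x(bottom)
    return [
        [(top[r][c], middle_full[r][c], bottom_full[r][c]) for c in range(len(top[0]))]
        for r in range(len(top))
    ]


if __name__ == "__main__":
    print(assemble([[1, 2], [3, 4]], [[10]], [[20]]))
    # [[(1, 10, 20), (2, 10, 20)], [(3, 10, 20), (4, 10, 20)]]
```

Four top-layer positions share a single middle and bottom reading, so fine detail in those two channels has to be estimated rather than measured at every pixel, which is the departure from the original Foveon approach.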

What remains frustrating is that we never got to see what Foveon could have become with proper development resources. Modern Bayer sensors produce excellent results through clever processing, but they're still making educated guesses about color at every pixel. The original Foveon approach captured actual color information. That's not a minor technical distinction. It's the difference between measuring and estimating, between recording and reconstructing. The market chose good enough over technically superior, and now an entire generation of photographers may never experience what true per-pixel color capture looks like.

Pellicle Mirror Systems: Optical Viewing Without Compromise

The camera industry loves to present technological progression as inevitable, as if each innovation naturally displaces its predecessors through obvious superiority. The evolution of pellicle mirror systems tells a different story, one where the technology transformed significantly but ultimately gave way to solutions that integrated its benefits differently.

A pellicle mirror uses a semi-transparent fixed mirror instead of the moving reflex mirror found in traditional SLRs. Canon pioneered the approach in film cameras with the 1965 Pellix, and later brought it to autofocus bodies with the 1989 EOS RT and the professional EOS-1N RS. Light passed through the mirror, with roughly 30 percent reflected up to the optical viewfinder and 70 percent continuing through to the film, resulting in approximately a half-stop of light loss. This eliminated mirror blackout entirely and removed the vibration and mechanical complexity of a moving mirror mechanism. Sports photographers particularly valued the uninterrupted optical view during shooting.
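
The half-stop figure follows from simple arithmetic: each stop halves the light, so the loss in stops is the base-2 logarithm of the inverse of the fraction that gets through. A minimal sketch (the function name is just for illustration):

```python
import math


def stops_lost(transmission):
    """Light loss in stops when only `transmission` (0 < t <= 1) of the light passes.

    Each stop halves the light, so the loss is log2(1 / transmission).
    """
    if not 0 < transmission <= 1:
        raise ValueError("transmission must be in the range (0, 1]")
    return math.log2(1 / transmission)


if __name__ == "__main__":
    # 70 percent of the light continuing to the film.
    print(f"film: {stops_lost(0.70):.2f} stops lost")  # roughly half a stop
    # 30 percent of the light reflected to the viewfinder.
    print(f"viewfinder: {stops_lost(0.30):.2f} stops dimmer than the full beam")
```

A 70 percent share works out to roughly 0.51 stops, which is where the half-stop figure comes from; by the same arithmetic, the viewfinder's 30 percent share is about 1.7 stops dimmer than the full beam.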

Sony's SLT (Single-Lens Translucent) series, introduced in 2010, evolved the concept dramatically for digital photography. Models such as the Alpha a77 and a99 used a translucent mirror to split light between the main imaging sensor and a dedicated phase-detection autofocus sensor. Critically, these cameras used electronic viewfinders fed by the main sensor, not optical finders. The translucent mirror enabled continuous phase-detection autofocus during exposure and video recording, advantages that traditional DSLRs with moving mirrors couldn't match. The SLT design demonstrated that the fixed mirror concept could work brilliantly in digital cameras with EVFs.

The technology faced legitimate criticism in the film era. That half-stop light loss presented real challenges when ISO 400 was considered fast and ISO 1600 was grainy and rarely used. Sony, for its part, eventually abandoned the SLT design entirely with its transition to true mirrorless cameras. The breakthrough came when it integrated phase-detection pixels directly onto the main imaging sensor, eliminating the need for a separate AF sensor and the translucent mirror that fed it. This solution was more elegant from an engineering perspective, but it also meant giving up the SLT's continuous AF advantages during the transition period. Mirrorless cameras eventually caught up, but there was a window in which the technology regressed.

What's frustrating is that Canon's original optical pellicle approach never got the chance to evolve with modern digital sensor technology. A DSLR with true optical viewing, zero blackout, and minimal mechanical complexity, combined with modern sensor-based autofocus, could offer something distinct. The industry chose to solve the problem differently, moving entirely to electronic viewfinders rather than refining optical solutions. Electronic viewfinders undoubtedly offer advantages such as exposure preview and focus magnification, and they are clearly the future.

The pellicle approach wasn't abandoned because it failed. Canon stopped making pellicle film cameras as the market shifted to digital. Sony proved the concept could work beautifully in digital cameras but then found a more integrated solution that eliminated the mirror entirely. We moved from one compromise to another, trading mirror blackout for electronic lag, mechanical complexity for power consumption, optical reality for digital approximation. The technology evolved rather than died, but we lost something in the translation.

Modular Camera Systems: When Longevity Lost to Obsolescence

Camera manufacturers face a fundamental tension between building products that last and building products that require frequent replacement. The Hasselblad V-system, produced from 1957 to 2013, represented one extreme of this spectrum. The system's modular design allowed photographers to swap film backs, viewfinders, and focusing screens onto bodies that could remain in service for decades. A photographer could start with a basic setup and gradually expand capabilities, or update just the components that mattered for new projects. Bodies built in the 1970s could accept digital backs made in the 2000s. That kind of longevity created legendary customer loyalty but presented obvious challenges for a company's revenue model.

The modularity extended beyond simple lens interchangeability. Different film backs allowed switching between 120 and 220 film mid-shoot, or between color and black and white without wasting partial rolls. Viewfinders ranged from simple waist-level finders to metered prisms, and the focusing screens beneath them were also user-swappable, allowing customization for different focal lengths or shooting styles. The famous Acute-Matte screens provided exceptional clarity and focusing precision for critical work. The system's mechanical interfaces were designed with such precision that components from different decades could work together seamlessly. This approach created cameras that photographers viewed as long-term investments rather than consumable technology.

The transition to digital photography exposed both the strengths and vulnerabilities of modularity. Hasselblad, Phase One, and others produced digital backs compatible with existing V-system bodies, allowing the 503CW and similar models to remain relevant well into the digital age. But digital technology evolved at a pace that mechanical systems never faced. Sensor technology, processing power, and connectivity standards changed rapidly enough that backs became obsolete before bodies wore out. The modular design meant photographers could upgrade sensors without replacing entire systems, but it also meant carrying separate batteries, dealing with multiple firmware versions, and accepting communication speeds limited by decades-old interface designs.

But the industry may have discarded modularity too completely. The environmental impact of complete camera replacement every few years deserves consideration, even if photography's footprint remains smaller than that of smartphones or computers. Electronic waste from obsolete cameras represents both a disposal challenge and a loss of embodied manufacturing energy. From a user perspective, the upgrade cycle creates constant pressure to replace functioning equipment. A modular approach focused on electronic rather than mechanical interfaces could address some of these concerns. Imagine a camera body where you could upgrade the sensor module independently, swap between different types of electronic viewfinders, or update the processor board without replacing the entire chassis.

Some manufacturers have experimented with limited modularity. The RED cinema camera system uses interchangeable sensor modules. Phase One continues to produce genuinely modular medium-format systems with its XF and XT cameras, where backs remain interchangeable in the V-system tradition, along with viewfinders and grips on the XF. But these remain expensive niche products rather than mainstream practice. The industry has largely settled on integrated designs with two- to three-year replacement cycles as the most profitable approach. Even lens mount compatibility, once sacred, has seen major manufacturers transition to entirely new systems. Canon moved from EF to RF. Nikon shifted from F to Z. These changes were driven by optical and electronic requirements, and in fairness it was time for them, but they also demonstrate that even the most established forms of modularity eventually yield to integrated redesigns.

The death of truly modular camera systems reflects priorities that have little to do with image quality or user needs. Integrated designs are cheaper to manufacture at scale, easier to weather-seal, and create predictable upgrade cycles that support quarterly earnings reports. Modularity requires precision engineering, long-term parts support, and commitment to backwards compatibility. The V-system's five-decade production run seems increasingly like an anomaly. Hasselblad itself moved on to the H-system and eventually the X-series, which maintains some modularity but nothing approaching the V-system's comprehensive approach. We've accepted that cameras are disposable technology rather than lasting tools, not because modularity failed but because disposability proved more profitable.

Conclusion

These three technologies share a common thread. None died because they were technically inferior. Foveon sensors produced more accurate color. Pellicle mirrors eliminated blackout and vibration. Modular cameras lasted decades instead of years. They struggled or vanished because markets, manufacturing realities, and business models couldn't support their continued development in their original forms. Sometimes the best technology loses not because it deserves to, but because good enough proves easier to scale, market, and profit from. The camera industry's history isn't just a story of progress. It's also a catalog of promising roads not taken, innovations abandoned before they reached maturity, and technical excellence sacrificed for commercial convenience.

20 Comments

Rotating backs! My Mamiya RB67 could rotate the back to switch between landscape and portrait without putting all the camera controls at a weird angle. Nowadays everyone is taking vertical shots for Instagram but our cameras are still designed to be used primarily horizontally.

and GPS! Cameras like the Nikon D5300 from 2013 had GPS built-in so your photos could appear on a map, and it was SUPER useful! It actually worked, unlike the smartphone apps that claim to tag your photos.

My 4x5 has a rotating film holder too, for the same reason. I let the light and subject matter dictate vertical or horizontal framing, not an internet app. And my GPS is built into my head; I don't need a camera or a phone to tell me where I was.

As for Sigma's Foveon sensors, I hope it's not been scrubbed for good; I'd love to work with one sometime. After all, the layering of the sensor colors made sense to me, since that's how film emulsions are layered. DUH!!!

Foveon? Had its chance, died.

Sigma says they're still working on a FF version, but has not even guessed at a release date.

Interchangeable parts is what interchangeable lenses is... isn't it? I mean, it kind of is. I can't see a new sensor being easy to install into a camera with IBIS and all that. Plus, it would be pretty easy to mess that up.

Interchangeable parts? They can't even do interchangeable batteries (3 for Sony's, 4 for Nikon's, and 6 for Canon's current lines - assuming Google is correct).

The Foveon sensor seems like it would be a perfect match for Hasselblad.

Tom, how so?

Because one of the perceptions people have of Hassy is that it's all about image quality ... that may be an accurate perception or it may not be, but nonetheless that is what some photographers assume when they see the Hasselblad name. And Foveon seems to be about image quality. So for a brand perceived as prioritizing image quality to use a technology that is all about premium color gathering seems to me to be a natural, or at least a sensible, pairing.

Thanks Tom, I never gave much thought to Hasselblad other than being a studio camera, and one that made it to space.

As for the Foveon sensor, as mentioned earlier, I've always been intrigued by the layering of the colors, but always curious as to the chosen order of blue, green, red [B top, R bottom]. I'm curious whether you and others may be wondering the same. If you are familiar with scuba and/or underwater photography, the first colors lost below 1 atmosphere are red/yellow, below 2 atmospheres green, and below 3 atmospheres everything is primarily blue. To me it seems only natural to layer the sensor colors RED/Green/Blue.

That is very interesting. Until I read this article, I had never realized that there were these challenges to the Foveon technology. I'd not realized that there was light loss on the bottom layers. Nor realized that ISO performance was so much worse than conventional sensors.

I wonder ..... if someone developed sensor technology in which each pixel had its very own color filter array, would that help to overcome some of these light-gathering and image quality difficulties? I mean, I realize that it would be insanely expensive - like probably hundreds of billions of dollars - to create such a radical sensor system. But in a fantasy world, if such a thing could be made, would it solve some of the problems?

Paul, you pose a very good question about the order of the colors. The technical details are fairly complex, but I will try to explain it.

The order of the colors is determined by the physics of the semiconductor used in the sensor. The sensors are photon detectors. A photon of sufficient energy dislodges an electron from silicon atoms in the material, which creates a current. If a photon is of insufficient energy, it will not dislodge an electron and the photon will pass through the detector. The energy of a photon is proportional to its frequency, which is the inverse of the wavelength. A photon on the blue end of the spectrum has shorter wavelength, which gives it a higher frequency and thus higher energy, than a photon of red light. The wavelength of green light is between blue and red.

The detectors in stacked pixel sensors (such as the Foveon) are doped to be sensitive to certain wavelengths of light. A blue detector will not detect a photon at the wavelength of red light because there is insufficient energy in the photon to displace an electron. Therefore, the photon of red light will pass through the blue sensor. But the converse is not true. A blue photon has sufficient energy to displace an electron in the red sensor, so it will not pass through. The order of the stack is determined by the wavelengths to be detected. So, a red photon will pass through the blue sensor and green sensor and be sensed by the red detector. A green photon will pass through the blue sensor and be detected by the green sensor. A blue photon would be detected by the top blue sensor.

If the red sensor was on the top of the stack, every photon of light in the spectrum from red to blue would have sufficient energy to displace electrons in the red sensor. So, no photons of green or blue wavelength would pass through the red sensor to the sensors below. No photons would make it to the green or blue sensors. Therefore, the order of the stack has to be shortest wavelength (blue) on top, mid wavelength (green) in the middle, and longest wavelength (red) on the bottom.

You may wonder why the colors don't get mixed in each pixel in a Bayer sensor. The reason is that Bayer sensors and stacked pixel sensors have a fundamental difference. Each pixel in a Bayer sensor is identical and is doped to detect the full spectrum of visible wavelengths. Optical filters are then used to filter out all but the desired photon wavelength for the designated color of that pixel. The stacked detectors can't have optical filters. As stated above, the doping of the silicon is used to provide the wavelength filtering (aka spectral responsivity). But due to the physics of the semiconductor, the spectral responsivity can be thought of as "one-sided", meaning that it will only "filter out" longer wavelengths (it doesn't actually filter the photons; they pass through unimpeded). The full range of shorter wavelengths is still detected. So, the order of the stack is important because the only way to "filter" the shorter wavelengths is to make sure the detector above will absorb the photon.

Interesting.

Ai, thanks so much for all your information and your energy putting it together. It's a lot to take in but I'll do my best.

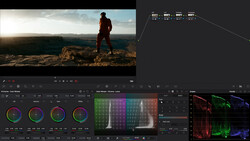

What does the lead photo have to do with the story? Are the Canon DSLR and tripod examples of technologies that didn't die before their time?

That is a very good question. I would like to think that each and every photo used in an Fstoppers article is carefully chosen because of its relevance to the subject of the article. I want a photo to be something that visually supports the text, not just something filling space.

It showcases camera technologies that died before they should have, but they’re not in the photo because they died before they should have.

So the cameras were there but then the random stock photo model took their place :)

Had to fill that space with something!

Great article, I worked for Hasselblad for 38 years as a service technician and recall a demonstration in their offices in Wembley of a prototype Hasselblad using a Foveon chip, but it was not to be! It was probably in the 2000s (https://www.ins-news.com/en/100/645/1132/Hasselblad-and-Foveon-Partners…) .